Why Radiology AI Didn’t Work and What Comes Next


“What happened to those AI Radiology companies?”

I’ve gotten a lot of questions about AI in Radiology. I’m not a radiologist or an AI expert, and I’ve gotten 2 scans done in my life. So I feel qualified to give my opinion.

Jk, but we’ve been having some very lively chats in the OOP Slack about this, so I thought I’d start bringing some perspectives on this problem into the newsletter.

Today I’m very excited to have a guest post from Ayushi Sinha who worked at Nines, a company that was applying AI to radiology.

In part 1 today, we’ll go through the problems that Wave 1 AI radiology companies dealt with. In the next part, we’ll talk about some of the different strategies being tried today in AI Radiology. Make sure to sign up for the newsletter to get part 2.

And by “we” I mean her; I’m merely the meme maker for this post.

I worked at Nines, an OG AI Radiology Startup

From Ayushi Sinha

My first job was as a product manager at Nines. When I signed my contract in 2019, Nines was a point solution, attempting to sell our FDA-cleared computer vision models to hospitals. By the time I started full time in 2020, we had pivoted to an AI-enabled teleradiology practice. Our customers included health systems like UPMC and Yale, and together we served 400,000 patients on an annualized basis.

But ultimately, we were quietly acquired. It felt like folding; we had fallen short of our lofty ambitions. And we weren’t alone.

Over the last decade, dozens of radiology AI startups have come and gone. Zebra. Aidoc. Qure.ai. Nines. A few remain. Many others have pivoted, wound down, or been quietly folded into teleradiology platforms.

So what happened? Why didn’t radiology, one of the most data-rich, image-heavy, and overburdened fields in medicine, embrace AI the way it seemed destined to?

This essay is my attempt to answer that. It’s part postmortem, part roadmap. And, hopefully, a guide for the next wave. So what were the problems?

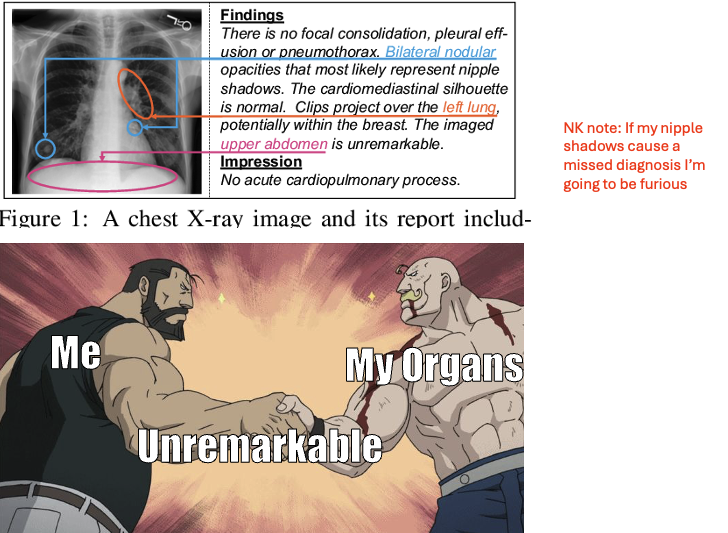

Problem 1: Medical AI Struggles with Hedge Language and Documentation Norms

Unlike coding or mathematics, medicine rarely deals in absolutes. Clinical documentation, especially in radiology, is filled with hedge language — phrases like “cannot rule out,” “may represent,” or “follow-up recommended for correlation.” These aren’t careless ambiguities; they’re defensive signals, shaped by decades of legal precedent and diagnostic uncertainty.

When our team trained NLP models to identify whether radiology reports recommended a follow-up scan, we encountered a striking phenomenon: nearly every report recommended a follow-up, regardless of actual clinical necessity. Why? Because radiologists have internalized a medico-legal truth. It’s safer to suggest a follow-up than risk being sued for missing something rare.

For AI, this is a labeling nightmare. Unlike doctors, who interpret language in context, AI learns from patterns in text. If hedge language is ubiquitous, the model will overpredict follow-ups, degrading both specificity and clinical utility. Without carefully curated labels and a deeper understanding of the intent behind the language, AI systems will inherit human caution, but not human judgment.
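To make the labeling problem concrete, here is a minimal sketch (not the pipeline we actually used at Nines) of what happens when you derive “recommends follow-up” labels straight from report text. The five report impressions and their curated labels are invented for illustration, and the stricter rule (`concrete_label`, requiring a named modality or time interval) is a hypothetical heuristic tuned to these toy sentences, not a real fix.

```python
import re

# Hypothetical report impressions, invented for illustration (not real patient data).
# Each pair: (impression text, curated label = does this patient truly need a follow-up scan?)
REPORTS = [
    ("No acute intracranial abnormality. Follow-up recommended for correlation.", False),
    ("8 mm solid nodule in the right upper lobe. Recommend CT follow-up in 3 months.", True),
    ("Small hypodensity, may represent a cyst; cannot rule out other etiology. "
     "Follow-up as clinically indicated.", False),
    ("Normal chest radiograph. Clinical correlation and follow-up advised.", False),
    ("Enlarging 12 mm adrenal mass. Dedicated adrenal CT follow-up recommended.", True),
]

FOLLOW_UP = re.compile(r"\bfollow-?up\b", re.IGNORECASE)
# Hypothetical heuristic: a "real" recommendation names a modality or a time interval.
CONCRETE = re.compile(r"\b(CT|MRI|ultrasound|PET)\b|\bin \d+ (weeks?|months?)\b", re.IGNORECASE)


def naive_label(text: str) -> bool:
    """Weak label: any mention of follow-up counts as a recommendation."""
    return bool(FOLLOW_UP.search(text))


def concrete_label(text: str) -> bool:
    """Stricter weak label: follow-up must come with a modality or an interval."""
    return bool(FOLLOW_UP.search(text)) and bool(CONCRETE.search(text))


def specificity(predicted: list[bool], actual: list[bool]) -> float:
    """True negatives divided by all actual negatives."""
    tn = sum(not p and not a for p, a in zip(predicted, actual))
    negatives = sum(not a for a in actual)
    return tn / negatives if negatives else float("nan")


if __name__ == "__main__":
    actual = [label for _, label in REPORTS]
    for name, labeler in [("naive", naive_label), ("concrete", concrete_label)]:
        predicted = [labeler(text) for text, _ in REPORTS]
        print(f"{name}: positive rate={sum(predicted) / len(predicted):.0%}, "
              f"specificity={specificity(predicted, actual):.2f}")
```

Because every report mentions follow-up, the naive labeler marks all five as positive (specificity 0.00), while the stricter rule matches the curated labels on this toy set. A model trained on the naive labels would learn the hedge, not the clinical need.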


If you want to get in front of people building with AI tools in healthcare, let’s talk about sponsoring our upcoming NY AI hackathon. It’s an awesome group of builders, and people will see real-world builds using your tool.

Back to Ayushi.

Problem 2: Accuracy Isn’t Binary. It Breaks Down at the Long Tail

Machine learning models are excellent for 90% of cases. But it’s the long tail of edge cases that determines whether these systems are safe and clinically useful.

Consider the historical example of Leon Trotsky. After being expelled from the Soviet Union, he was assassinated in Mexico City with an ice axe to the head. Ask yourself: if that patient presented to a hospital today, would an AI model trained on conventional trauma datasets flag “ice axe injury to the brain”?

It’s highly unlikely that even the most robust radiology models have seen a labeled instance of penetrating cranial trauma from an ice axe. But a human radiologist, even without prior exposure to that specific example, would likely recognize the injury’s implications and triage accordingly.

This illustrates a key point: general intelligence allows humans to reason across unseen cases. AI models, unless specifically designed with broader reasoning capabilities or trained on sufficiently diverse datasets, struggle at the margins.

The technical challenge is twofold:

- How do we improve model coverage at the tail without incurring prohibitive annotation costs?

- Can we combine automated systems with human-in-the-loop supervision to address the rare but dangerous edge cases?

Therefore, the first wave of radiology AI companies focused, obsessively, on accuracy. Our startup was even named Nines, a nod to the pursuit of 99.999999% precision. It made sense. Deep learning had just proven itself on ImageNet. In 2016, Hinton declared that we should “stop training radiologists.” The FDA, newly receptive to software-based devices, offered a visible regulatory pathway. So startups competed on metrics: sensitivity, specificity, AUCs. But accuracy, while necessary, is not sufficient.

Problem 3: No One Buys Point Solutions

There was a real tradeoff in Wave 1: you could build more accurate, explainable, and clinically responsible AI by focusing on narrow use cases. That’s what many of us did.

At Nines, we had FDA-cleared models for detecting brain hemorrhages and measuring lung nodules. Each was technically rigorous. Clinically validated. And most importantly, built around real physician pain points.

Take our brain hemorrhage tool.

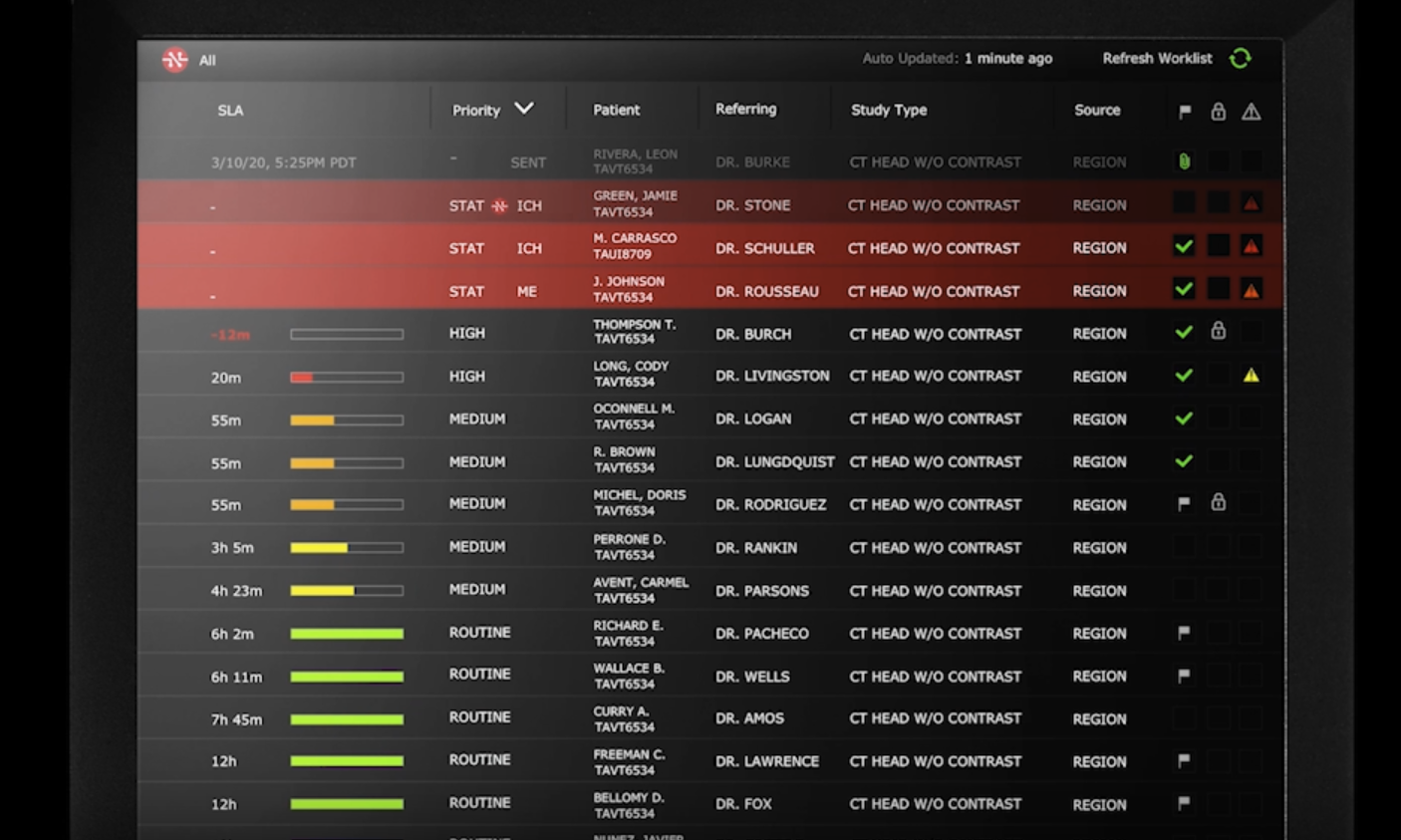

We spent months interviewing radiologists and emergency room physicians, trying to find a problem where AI could meaningfully help. The need we uncovered wasn’t subtle. It was life or death. In a busy emergency department, radiology scans pile up. A patient with a deadly intracranial bleed might be sitting in the queue behind someone with a routine headache. By the time a radiologist reads the scan, it can be too late.

Our model was designed to prioritize brain hemorrhage patients automatically, flagging suspected hemorrhages in minutes, pushing them to the top of the queue, and alerting the care team before anyone even opened the case. It was a tool that served both a clinical and operational need.
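Here is a minimal sketch of the queueing idea behind that triage tool: a reading worklist where studies a model flags for suspected hemorrhage jump ahead of routine ones. The names (`Worklist`, `suspected_bleed`) are hypothetical, the model’s output is assumed to arrive as a simple yes/no flag, and this is an illustration of the logic, not how the Nines product was built.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class QueuedStudy:
    # Sort key: flagged studies (priority 0) come before unflagged (priority 1),
    # then first-come, first-served by arrival order within each tier.
    priority: int
    arrival: int
    study_id: str = field(compare=False)


class Worklist:
    """Hypothetical reading worklist that promotes AI-flagged studies."""

    def __init__(self) -> None:
        self._heap: list[QueuedStudy] = []
        self._arrivals = 0

    def add(self, study_id: str, suspected_bleed: bool) -> None:
        # `suspected_bleed` stands in for a model's output; how it is computed
        # (and at what threshold) is outside the scope of this sketch.
        priority = 0 if suspected_bleed else 1
        heapq.heappush(self._heap, QueuedStudy(priority, self._arrivals, study_id))
        self._arrivals += 1

    def next_study(self) -> str:
        # Pop the highest-priority, earliest-arriving study.
        return heapq.heappop(self._heap).study_id


if __name__ == "__main__":
    worklist = Worklist()
    worklist.add("headache-1", suspected_bleed=False)
    worklist.add("headache-2", suspected_bleed=False)
    worklist.add("bleed-3", suspected_bleed=True)
    print([worklist.next_study() for _ in range(3)])  # ['bleed-3', 'headache-1', 'headache-2']
```

The suspected bleed arrives last but is read first; within each tier the queue stays first-come, first-served. A real deployment would also need alerting and a plan for when the flag is wrong, which is exactly where the workflow integration work lives.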

But here’s the problem: technical elegance is orthogonal to business viability.

Hospitals and radiology groups don’t want to contract with 10 vendors for 10 narrow AI tools. IT teams don’t want to manage 10 separate integrations. And procurement teams certainly don’t want to negotiate 10 separate pricing models, especially when none of them solve a full workflow. Even when the models were accurate, they didn’t fit.

The reality is: no one buys point solutions in healthcare.

Problem 4: The Business Model Wasn’t Clear

At Nines, we had FDA-cleared models ready to deploy, but no clear path to reimbursement. Hospitals liked our tech. Radiologists saw the value. But without a billing code, there was no financial incentive to adopt. We were stuck trying to justify ROI on speed, triage, or “soft savings,” arguments that never closed a procurement cycle.

So nearly every Radiology AI startup, including ours, pursued a software-as-a-service (SaaS) business model. At the time, recurring revenue was considered essential for investor interest, and SaaS offered a clear, scalable story for venture capital. However, this model proved misaligned with how healthcare systems purchase and extract value.

Radiology AI tools were frequently priced as standalone software products, even though they were delivering value by improving clinical throughput, turnaround times, and operational efficiency. All of which are tied to service delivery, not software usage. As a result, we were capped in what we could charge, despite generating significant downstream impact.

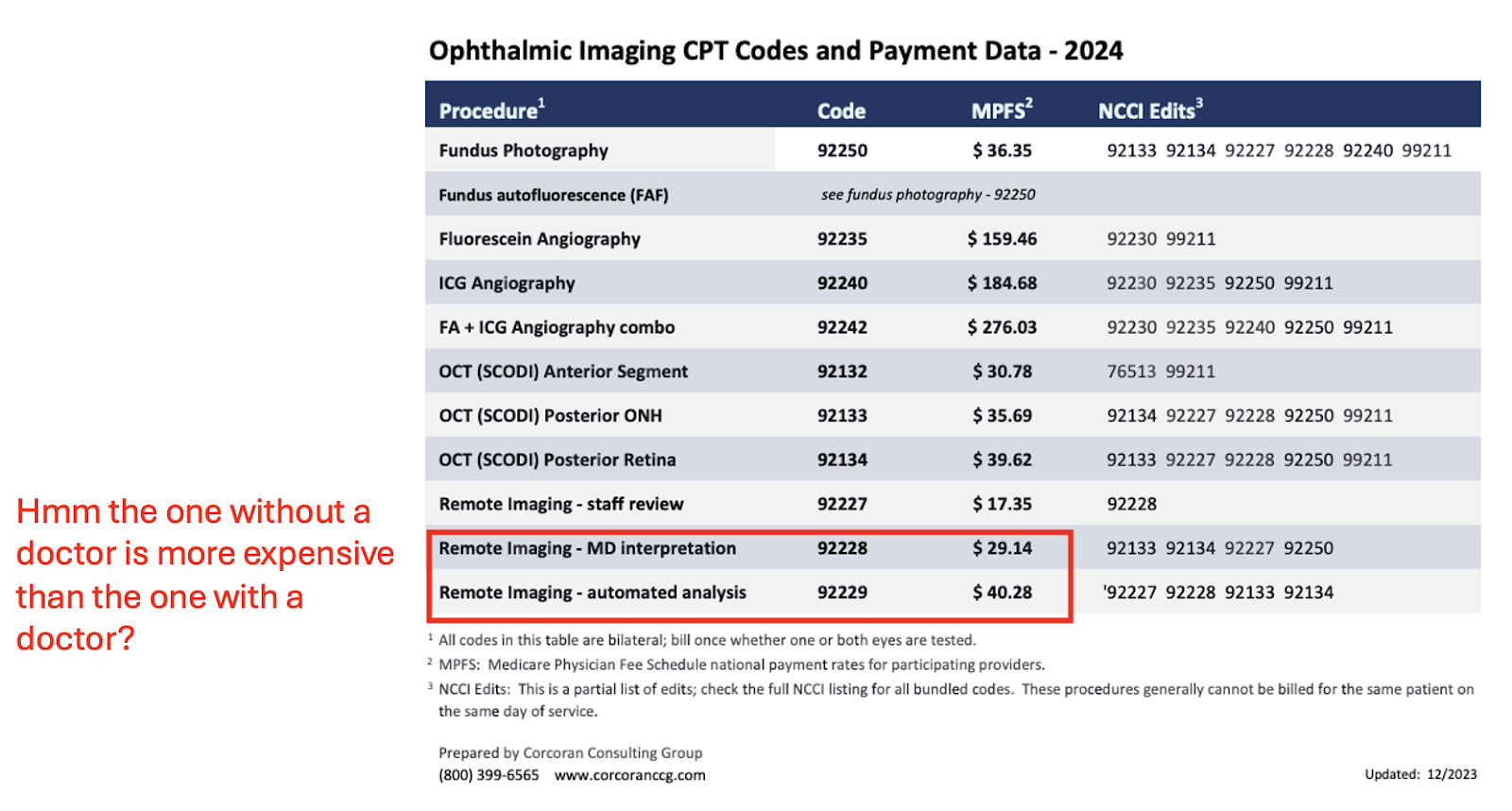

Without direct billing mechanisms or CPT reimbursement codes, it was difficult to monetize the outcomes these tools enabled. Selling software alone meant capturing only a fraction of the value AI actually created.

Ultimately, we were offering tools, not outcomes. And hospitals, rightly, were unwilling to pay for potential unless it came bundled with performance.

[NK note: I’ve talked in the past about how autonomous AI CPT codes actually charge more than their human counterparts, and shared some thoughts on pricing/reimbursement here]

Problem 5: Being First Didn’t Help

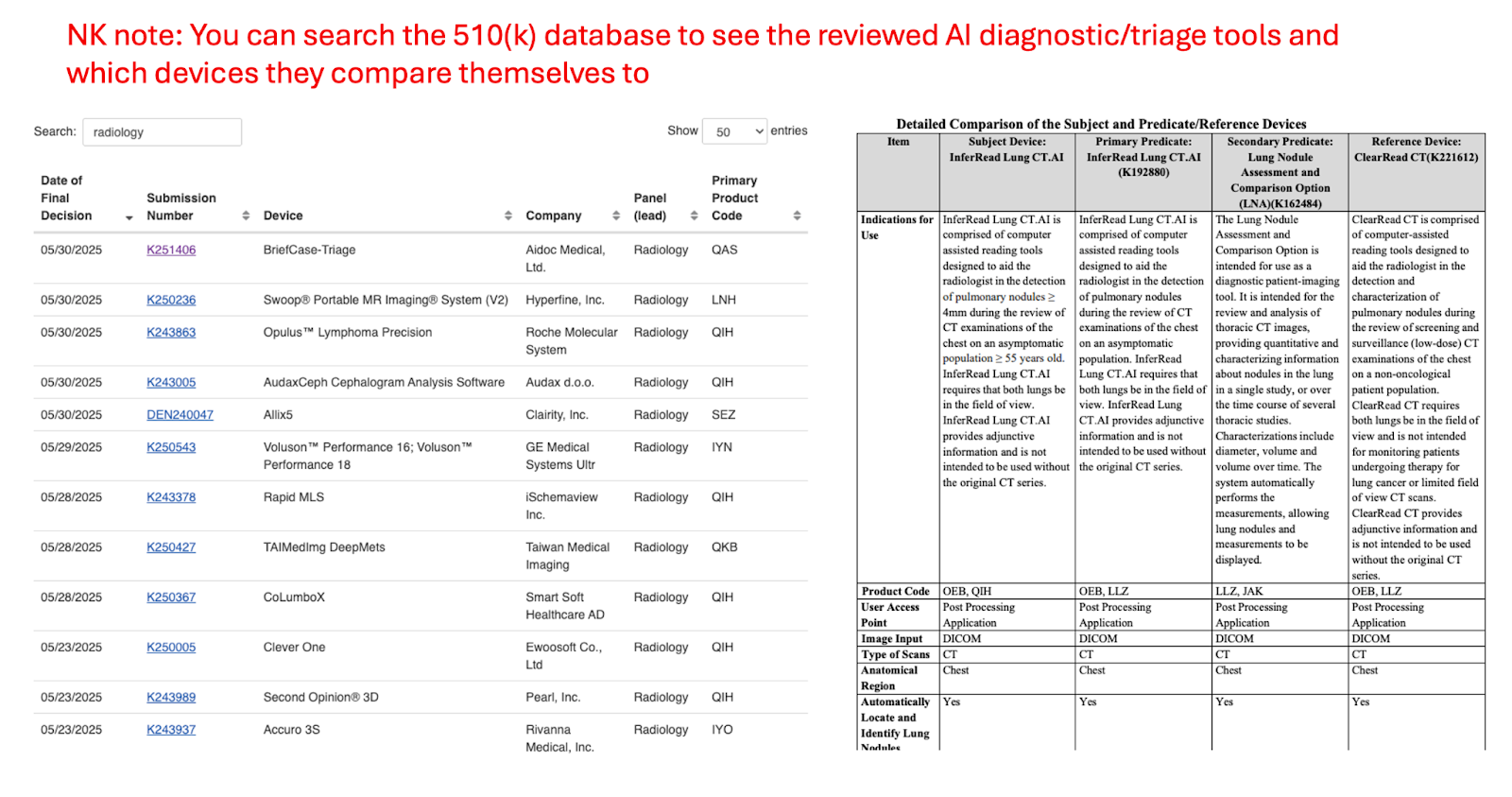

One of the cruel ironies of Wave 1 is that first movers paid the regulatory price and got none of the competitive advantage. Wave 1 had to submit De Novo FDA applications. We ran costly reader studies. We hired regulatory consultants, validated pipelines, and navigated Class II device regulations from scratch.

Paving the way was necessary, but adoption didn’t catch up in time to deliver a return on that investment. The upside is that Wave 2 now stands on the shoulders of this effort, submitting 510(k)s that use our models as predicates. They build lighter tools with human-in-the-loop UX that avoid regulation altogether. They move faster. Cheaper. And often with clearer guidance.

There’s a bit of a first mover disadvantage here, especially as the FDA was figuring out how to regulate AI diagnostic tools like ours. Future companies benefit from having more predicate devices to point to and clearer pathways to approval (e.g. the 510(k) route, citing earlier De Novo clearances as predicates). We’ll talk more about this in part 2.

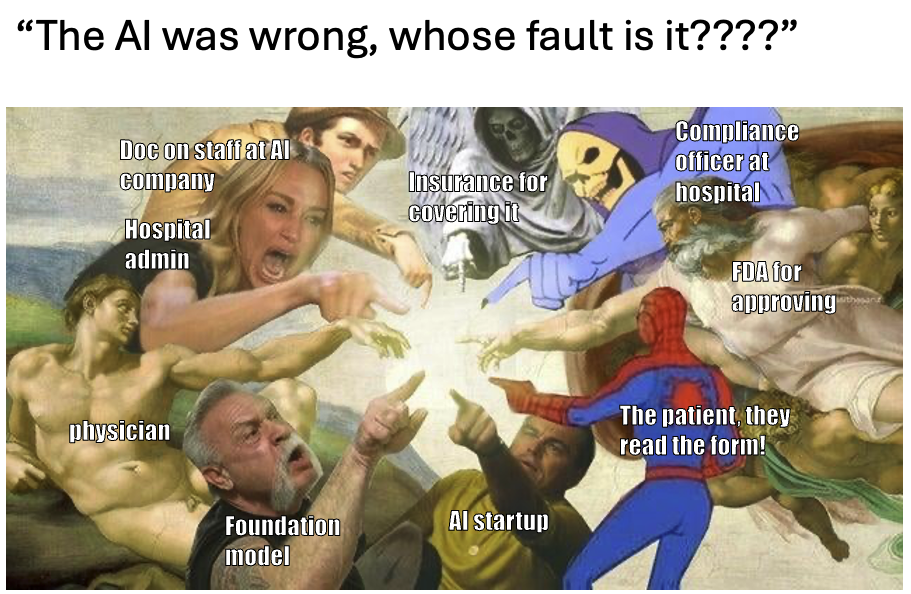

Problem 6: Liability Remains Murky

In traditional clinical care, errors fall under the domain of medical malpractice.

If a radiologist misreads a scan, the patient or family can seek legal recourse. Doctors carry insurance. They can be sanctioned. They can lose their license. But what happens when an AI model makes the error?

- Is the hospital liable for deploying the model?

- Is the radiologist liable for trusting the output?

- Is the startup that built the model accountable?

- And if no individual can be blamed, can anyone be held responsible?

As AI becomes more embedded in clinical workflows, we must establish clearer liability frameworks. Without them, clinicians may resist adoption, not because they distrust the technology but because they fear bearing the risk without reaping the reward.

Consider these two cases:

- False negative: If a radiologist overrides the AI interpretation in favor of their own judgment and misses a finding the AI caught, is the radiologist liable for ignoring the AI suggestion?

- False positive: If a radiologist overrides the AI interpretation in favor of their own judgment and the AI’s flag turns out to be a false positive, should the radiologist get credit for ignoring the AI suggestion?

How should we structure responsibility so that radiologists don’t simply defer to the model in both cases, even when deferring produces worse care in the false positive case?

Problem 7: FDA Holds AI to a Higher Standard Than Human Radiologists

There’s a profound regulatory asymmetry in healthcare AI. The average diagnostic accuracy of a board-certified radiologist varies between 70–95%, depending on the task (e.g. musculoskeletal). Errors are expected, managed, and litigated within the system of medical malpractice.

By contrast, AI systems submitted for FDA approval must meet rigorous standards across specific, predefined tasks. For example, an algorithm designed to aid in lung cancer detection must demonstrate not just general performance, but specific accuracy for:

- Nodules >3 mm in diameter

- Growth greater than 20% between studies

- High-risk location or density changes

A human radiologist can miss a nodule and still fall within accepted error margins, but an AI system is held to stricter standards. It must demonstrate minimum performance across every subtype or face rejection. On top of that, each clinical indication requires its own FDA clearance. In effect, the regulatory threshold for AI is stricter than for the clinicians it supports.
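A minimal sketch of why per-subtype requirements bite: a model can look fine on pooled sensitivity while one subgroup falls short. The reader-study counts and the 0.80 acceptance threshold below are invented for illustration; they are not FDA numbers or results from any real submission.

```python
from dataclasses import dataclass


@dataclass
class SubgroupResult:
    name: str
    true_positives: int
    false_negatives: int

    @property
    def sensitivity(self) -> float:
        return self.true_positives / (self.true_positives + self.false_negatives)


def pooled_sensitivity(results: list[SubgroupResult]) -> float:
    """Sensitivity computed over all subgroups combined."""
    tp = sum(r.true_positives for r in results)
    fn = sum(r.false_negatives for r in results)
    return tp / (tp + fn)


def failing_subgroups(results: list[SubgroupResult], threshold: float) -> list[str]:
    """Names of subgroups whose sensitivity falls below the (hypothetical) bar."""
    return [r.name for r in results if r.sensitivity < threshold]


# Invented counts, for illustration only.
RESULTS = [
    SubgroupResult("nodules > 3 mm", true_positives=180, false_negatives=20),
    SubgroupResult("growth > 20% between studies", true_positives=85, false_negatives=15),
    SubgroupResult("high-risk location/density change", true_positives=28, false_negatives=12),
]

if __name__ == "__main__":
    print(f"pooled sensitivity: {pooled_sensitivity(RESULTS):.2f}")
    print("below threshold:", failing_subgroups(RESULTS, threshold=0.80))
```

With these numbers the pooled sensitivity is about 0.86, comfortably above the bar, but the high-risk subgroup sits at 0.70. Under a per-subtype standard, that one subgroup is enough to sink the submission, even though a human reader with a similar miss rate on that subtype would not face the same gate.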

This raises a critical question: if AI must be more accurate than humans to be approved, what incentive remains for deployment, especially if reimbursement is still uncertain?

Conclusion and parting thoughts

So what’s changed? Why might Wave 2 companies succeed where Wave 1 didn’t?

The tech stack is more mature, bringing down model development costs. The business models are better and capture more value. Distribution and workflow are the focus. And the funding environment is more flexible.

Part 2 will dive deeper into why I’m optimistic about Wave 2 of AI Radiology.

[NK note: Sounds pretty rad! I’m sorry. Sign up here to get part 2 when it comes out. I’m sorry again.

This answered a lot of questions I had, and if you worked on Radiology AI I’m curious if this was your experience as well.]

Thinksquad out,

Nikhil aka. “Didn’t go to med school or become a radiologist because AI was going to take my job anyway” and Ayushi aka. “Stressed to the Nines”

Ayushi: This piece wouldn’t have been possible without insights from conversations with radiologists, ML engineers, healthcare operators, and my teammates at Nines. Thank you to Nikhil, Jan, Jaymo, Rebecca, Nand, Laura, Leora, Zach, and my family for providing feedback.

Twitter: @nikillinit

IG: @outofpockethealth

Other posts: outofpocket.health/posts


If you’re enjoying the newsletter, do me a solid and shoot this over to a friend or healthcare slack channel and tell them to sign up. The line between unemployment and founder of a startup is traction and whether your parents believe you have a job.

Healthcare 101 Starts soon!

Our crash course teaches the basics of US healthcare in a simple to understand and fun way. Understand who the different stakeholders are, how money flows, and trends shaping the industry. Each day we’ll tackle a few different parts of healthcare and walk through how they work with diagrams, case studies, and memes. Lightweight assignments and quizzes afterward will help solidify the material and prompt discussion in the student Slack group.


Interlude - Our 3 Events + LLMs in healthcare

We have 3 events this fall.

Data Camp sponsorships are already sold out! We have room for a handful of sponsors for our B2B Hackathon and our OPS Conference, both of which already have a full house of attendees.

If you want to connect with a packed, engaged healthcare audience, email sales@outofpocket.health for more details.