Turning Conversations Into Documentation Automagically 🪄 with Abridge


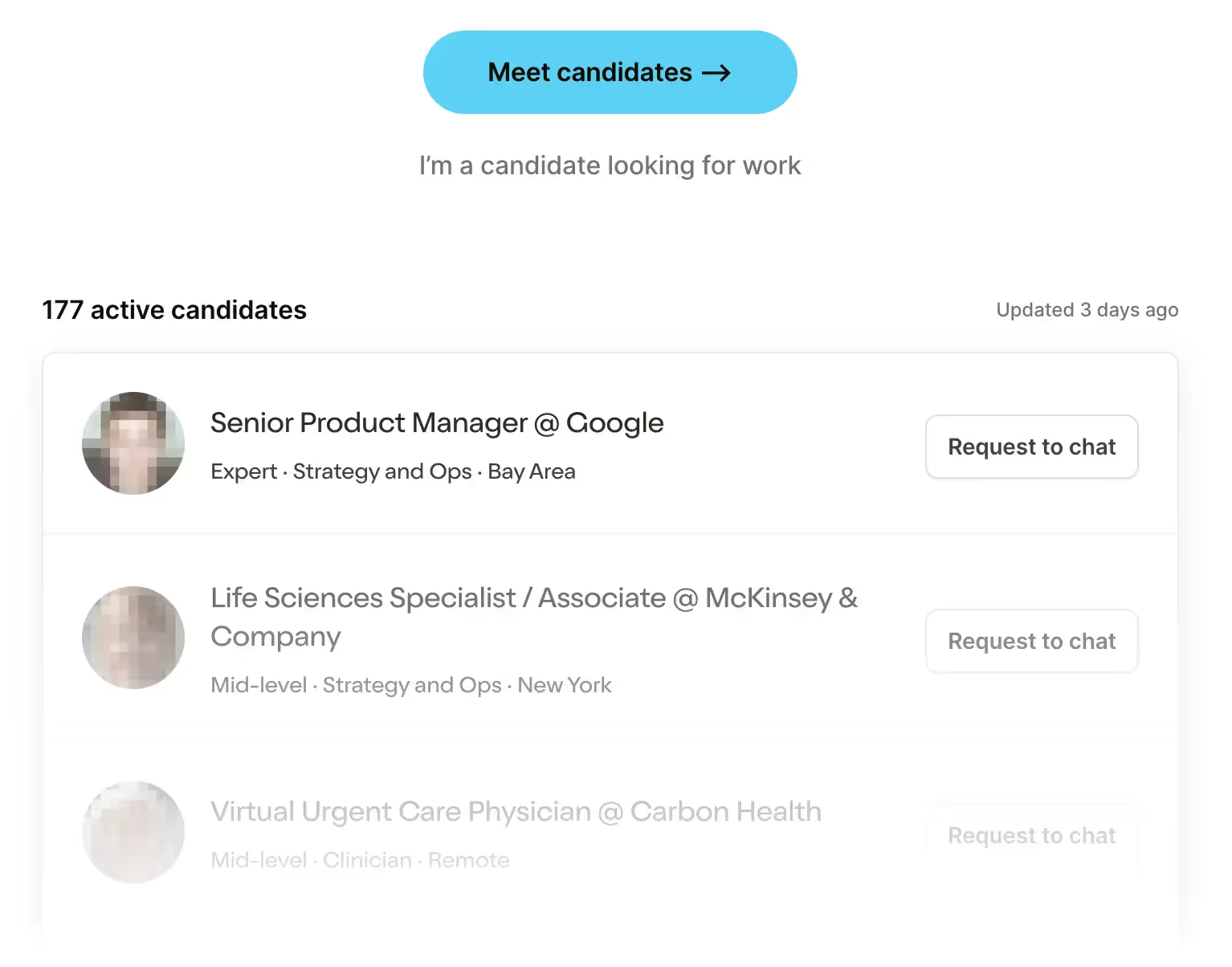



TL;DR

Abridge structures and summarizes doctor-patient visits from ambient conversations. Their AI transforms conversations in different ways, whether it’s clinically useful and billable documentation for doctors, structured data and analytics for coding and risk assessment, or a digestible care plan for patients. They’ll have to overcome competition, hesitance around being recorded, and the last mile transcription problem to be successful.

If you’re an enterprise looking for a way to document and structure information from medical conversations, contact Abridge and they’ll talk to you about the different ways they can help your business.

If you’re an individual clinician, Abridge is giving the first 20 OOP readers a free month to give it a run. Sign up here. (The doctor solution is very different from the consumer app. More on that later…)

This is a sponsored post - you can read more about my rules/thoughts on sponsored posts here. If you’re interested in having a sponsored post done, email nikhil@outofpocket.health.

Company Name - Abridge

Abridge is building machine learning centered technology and workflows that turn conversations into structured documentation for providers and patients.

The company is named after what readers wish I would do with this newsletter but I NEVER will. Long form until I die!!!! Also where’s the “health” in the name? Someone tell me if it’s legal to have a healthcare startup without health in the name, seems wrong.

Abridge was started by Dr. Shiv Rao after Shiv got carpal tunnel writing a discharge note and screamed “never again” to the Heavens. They’ve since raised $27.5M from Union Square Ventures, Bessemer Venture Partners, Wittington Ventures, Pillar Venture Capital, UPMC Enterprises, Yoshua Bengio, and Whistler Capital.

What pain points do they solve?

Today, the way important information in healthcare gets disseminated is usually through a conversation and then the gist is summarized into a document later. For example:

- You have a visit with your doctor where you tell them what’s wrong. During the visit they may jot down some notes as you’re talking or they may not take any notes at all. And at the end of the day (or later that week) they’ll finish their notes while trying to recall the details of the conversation – either from that chicken scratch or from memory.

- You’re discharged from the hospital after a diagnosis you weren’t expecting, and in your shell shocked state you’re given a bunch of instructions on meds to take or next steps.

- Pharma companies and health plans have call centers with nurses that reach out to patients for all sorts of reasons, from making sure patients are taking their specialty medications correctly, to helping them navigate where to get care, etc.

There are a bunch of issues with relying on a system where people need to remember conversations for documentation purposes later.

- We’re dependent on the person documenting to remember everything. How good is your memory at the end of the day? Because I literally don’t remember things mid sentence (that’s everyone…right? right?). As a result you see lots of inaccuracies or missing information in the health record.

- Not only doctors, but patients need to remember a lot of the details from a visit around next steps, what to avoid, etc. at a time when they might be overwhelmed by a new diagnosis or aren’t feeling well. You can tell me whatever you want, just know I’m going to be sending you a MyChart message later to double check.

- EMR documentation is meant for billing so it focuses on capturing the details meant for billing. Then we try to manipulate it for other uses like continuity of care, real-world evidence, etc. This is one of the reasons EMR data sucks – there’s a lot of data loss that happens between the visit and the documentation.

- The visit becomes focused on documenting, so doctors spend a lot of time looking at EHRs while simultaneously talking with the patient. This can make the doctor seem distracted and make patients feel unheard. Here’s a kind of nutty paper which tracked eye gazes from patients and doctors in 100 primary care visits and found that doctors looked at patients 46.5% of the time and their EHRs 30.7% of the time. 1% of the time they’re looking into the abyss.

- As a result of bad tooling and documentation burdens, doctors are really burnt out. This keeps getting worse as more needs to get documented per patient + clinical staff start leaving medicine. Doctors used to go to patients’ homes, get to know their families and history, and then give cocaine regardless of the ailment. Now, they just chart a lot, which is a lot less fulfilling.

What does the company do?

Abridge utilizes natural language processing and machine learning to turn health conversations into clinical documentation. For providers, Abridge not only transcribes the conversation but generates summaries of the conversation and structures it into the archetypal SOAP note that providers can quickly review and approve in their EMR. For the uninitiated, SOAP notes are a template to structure and document the patient’s story and the provider’s assessment + plan.

The secret sauce to Abridge is in its machine learning models – Abridge is powered by a proprietary dataset derived from more than 1.5 million medical encounters. They told me how they got it but I ain’t no snitch (some hints later on…).

I’m not smart enough to understand how it works. But even if you don’t get it, Abridge can point to evidence in the raw conversation for any given AI-generated summary so you can see where it comes from. It creates a great experience for the user without needing to fully understand the underlying process. Just like microwaves (don’t lie you don’t get how they work either).
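For the curious, here’s a toy sketch of what “pointing to the evidence” could look like. To be clear about the assumptions: the post doesn’t describe how Abridge actually does this, so the `find_evidence` function, the word-overlap scoring, and the sample transcript below are all made up for illustration. A real system would almost certainly use something far more sophisticated than counting shared words.

```python
import re


def tokenize(text):
    """Lowercase a string and return its set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def find_evidence(summary_sentence, transcript_turns):
    """Return (index, turn) for the transcript turn most similar to the summary.

    Similarity here is plain word overlap (Jaccard), a stand-in chosen for
    simplicity. Returns (-1, None) when no turn shares any word.
    """
    summary_words = tokenize(summary_sentence)
    best_index, best_score = -1, 0.0
    for i, turn in enumerate(transcript_turns):
        turn_words = tokenize(turn)
        union = summary_words | turn_words
        score = len(summary_words & turn_words) / len(union) if union else 0.0
        if score > best_score:
            best_index, best_score = i, score
    best_turn = transcript_turns[best_index] if best_index >= 0 else None
    return best_index, best_turn


# Hypothetical transcript, invented for this example.
transcript = [
    "Doctor: How have you been sleeping lately?",
    "Patient: Not great, I wake up a few times every night.",
    "Doctor: Let's schedule an MRI of your knee next week.",
]

index, turn = find_evidence("Schedule MRI of the knee next week.", transcript)
print(index, turn)
```

The point is just that each summary line carries a pointer back to a spot in the conversation, so a reader can check the source instead of trusting the summary blindly.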

They do have a lot of cool papers (all peer-reviewed! whee) if you want to see under the hood how their ML works on individual tasks, all of which together power the general Abridge models. For example, how do you know when a given word in a conversation is important? How do you use the rest of the patient context to understand why a given word like “diagnosis” was said? How do you convert those important words into some form of summarized report? Plus you need to deal with the problems of healthcare specific syntax, colloquial phrases, people talking at the same time, referencing older parts of the conversation, your parents’ divorce, etc.

Seeing how ML tackles different tasks, from identifying medication regimens to detecting errors, makes you realize how much needs to go on behind the scenes to build a product that looks simple on the front-end.

It’s worth highlighting how this compares to existing dictation/transcription services too.

- Abridge doesn’t need wake words or require doctors to speak in a specific way. It captures the conversation as it happens.

- It’s tech vs. a human scribe doing the documentation. This also makes it easier to roll it out across an entire organization since it’s not constrained by staffing or cost.

- Generating that documentation happens in real time vs. waiting for a person to transcribe it. You’ll still be in the exam room debating ordering the immediate Lyft or the “wait 15 minutes” Lyft by the time the summary comes out.

- It works across any channel (e.g. phone, telemedicine, in-person, the 4th dimension) and any specialty.

What is the business model and who is the end user?

The company sells to enterprises of all different kinds, and the pricing plans are based on usage and what product features are required.

Some examples of types of customers/end users:

- Hospital systems - Hospitals can implement Abridge to make it easier for their doctors to document a visit while also reducing burnout. Doctors can record a visit, receive structured documentation, and have it pushed into the EMR in real-time. They can then review the notes later with a recording ready for parts they need to recall. Capturing the raw audio is also useful for documentation necessary for billing, and coders can use the transcribed visits to create a claim.

- Payers - Health insurance carriers with Medicare Advantage plans want to have accurate documentation for risk-adjustment purposes. They can give Abridge to provider groups they own or work closely with to make this easier. Abridge can capture the raw audio, extract a diagnosis from it, and then map that diagnosis to a Hierarchical Condition Category (HCC) or ICD-10 code, which are used to understand how sick a patient is and whether the health plan should get a bonus.

- Care coordination companies and call centers - In addition to in-person visits, Abridge works with audio from phone calls and chat transcripts. If you’re a call center or a care coordination company trying to make sure that the patient knows the next steps, Abridge captures key info and provides it to the patient + any physicians who want to ensure continuity of care.

Job Openings

Abridge is hiring for a bunch of jobs, but particularly engineers (who isn’t?)

Machine Learning

Engineering

They’re hiring for other roles as well, including one that says “wildcard” where you can create your own role lol. I submitted my application for hired muscle, but I don’t meet the qualifications despite setting them myself.

___

Out-Of-Pocket Take

Abridge is pretty nifty, and there’s a few specific things I like about it.

Capture of raw data - Having the raw data on hand is really useful because you can then customize the data structure and output for your needs vs. relying on what’s written down in the EMR. From the same conversation you can turn the data into documentation for an insurance claim, a discharge note to be read by the patient, and potentially de-identify data for real-world evidence studies all derived from the same original source data. Beyond that, there could be use cases we think of later down the road and we can go back to this same raw dataset to run an entirely new analysis on it. Maybe patients are all mentioning some random side effect of a drug which the doctor thinks is totally unrelated because it’s mild or not listed on the label so they don’t document it.

Using documentation only would make it hard to do a retrospective analysis, but with raw data it’s much easier. This can potentially highlight and flag public health issues proactively instead of requiring a person to notice a trend and then run an analysis to confirm using data that may not have been intended for that kind of analysis.

Auditability - Having an auditable log of healthcare conversations that is available to both physicians and patients is a powerful concept.

- If there are any malpractice issues it’s easier to go back to the record vs. rely on recounting the perspectives from both parties.

- It can be helpful to bring a conversation to another doctor (second opinion or another person on your care team) to hear the context in which something was said. This can be especially useful when thinking about giving tasks to different members of a care team with different levels of credentials - there can be a higher level of confidence shifting tasks away to other members of the team knowing you can listen to the raw audio if you need to.

- This can potentially improve doctor-patient conversations by having clinicians relisten to the record. This is common in the sales world and other external facing roles that have some coaching aspect. Having records for clinicians to review themselves or with mentors could help them improve certain things like bedside manner, process for diagnosing, delivering news to patients, etc.

New interfaces for documentation - I mentioned this in the Future of EHRs report, but even though I think it’s unlikely existing EHRs go away I think it’s possible that you’ll see new interfaces that clinicians interact with which abstract EHRs into the background. Voice is definitely a contender here. Clinicians can interact with a voice + dashboard instead of the drop down menus of EHRs. Hopefully this should reduce documentation burden for clinicians while still keeping the same EHR infrastructure which probably won’t get overhauled.

I think the real interesting part becomes a new interface for patients to interact with. Let’s be honest, the EHR patient portals are terrible to interact with and super confusing to try and make sense of. On the patient side, Abridge can generate a custom summary that changes depending on the level of nuance and sophistication of the patient (e.g. their reading level, language, understanding of medical information). And if the summary isn’t good enough, the patient or caregiver can see where in the raw audio the relevant information was and re-listen to it.

Plus there’s something interesting about an interface that can potentially surface relevant information in real-time during the visit based on what’s being said. For example if your doctor is saying you need to get an MRI, it could be helpful to surface that there’s a cheaper MRI a few blocks away vs. the one in the hospital that’s 10x the cost. Or if a physician tells you that you’re going to need a drug, it could surface your pharmacy benefits to know if that drug is covered or if you should talk with your doctor about an alternative while you’re in the office. Or if they tell you that you need to try and get some sleep, it can pull up Out-Of-Pocket.

Patient usability + bottoms-up adoption - Healthcare historically has not had a lot of bottoms-up adoption success stories where people absolutely love the product and then it forces enterprises to get on board. This has been a common growth and sales motion in tech, with companies like Slack or Notion getting users to love them and eventually moving into selling to companies. Abridge started with this route initially, generating a lot of consumer interest with over 200,000 patients using an app to help them record the details of their care plans with permission.

They have a pretty smart tagging mechanism which lets patients tag their own doctor even if the doctor isn’t on Abridge, or lets doctors tag other doctors who they want to see a report. If the doctor loves it too, they’ll adopt it as well (Abridge is already being used by over 2,000 clinicians across the country). And if enough doctors love it, the enterprise/health system starts getting interested too. Theoretically each one of those stakeholders has a different reason for liking the tool. Abridge is set up to enable providers to automatically send summaries to their patients, enterprises to make billing and documentation better, and ultimately help people understand and follow through on their care plan. Doctors can say things once, and then Abridge automatically reminds their patients of the care plan and other key moments from the conversation.

I’m a big believer that product-focused companies delivering great experiences are going to win the next wave of healthcare over sales-driven companies that force users to adopt tools. But I’m also a big believer that cheese should be cut into cubes for pasta instead of grated, so my opinions are questionable.

–

As with any company, there are lots of potential obstacles between here and Abridge’s grand vision.

Competition - The voice-to-text, NLP, and EMR data extraction race in healthcare is red hot right now. For example, Microsoft just made a massive acquisition here. In general the approaches to this problem seem to be building either voice-to-text transcription, using scribes in some capacity, building healthcare specific natural language processing, or building collaboration tools.

Abridge’s bet is that it can do all of those things at once AND do it before it even gets into the EMR. By creating this product that works upstream of the EMR it can theoretically more easily integrate into new EMRs/workflows and manipulate the data for new use cases like we talked about earlier. If a company has to get access to the EMR data before it can work, then it’s hard to show value upfront + each new customer will require a deep integration. Abridge needs the integration to push the data into the EMR, but it can generate those reports and summaries out of the box.

This seems to be a classic race of whether incumbent companies that already have legacy distribution and use a lot of humans to do transcription and summarization can build this new tech faster than Abridge can get distribution. Those deep integrations are hard to do, but make the existing incumbents very sticky and ingrained in the workflows of large health systems. This is one of the reasons I’m particularly interested in their clinician focused, bottoms-up adoption strategy - because it makes it easier for the frontline staff, not just the back-office.

The “last mile” of transcription and parsing - The papers Abridge publishes give a sense of the problems some NLP models face. For example, here’s an excerpt from their paper about predicting noteworthy snippets of a healthcare conversation.

“The cardiovascular system has 152 symptoms in our dataset, including ‘pain in the ribs’, ‘palpitations’, ‘increased heart rate’ and ‘chest ache’. A learning-based model must learn to associate all of these symptoms with the cardiovascular system in addition to recognizing whether or not any given patient experiences the symptom.”

If a transcription system makes enough errors that the original person has to go through the text with a fine-tooth comb to catch them, then time wasn’t really saved and it’s basically as much of a headache as regular documentation. Bringing the accuracy up high enough that it only requires minimal fixes after recording is a must.

No solution on the market is perfect yet. Abridge claims its AI captures 92% of the relevant next steps and plan items from a conversation. It’s unrealistic to expect every ML model is going to be 100% great, so I respect companies that put their learnings out there for people to see.

One thing I like about Abridge is that because it owns the workflow of creating a document out of the visit, it can flag areas where it wants the physician to review because it doesn’t have high confidence. That review process also helps the algorithm improve over time.
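To make the “flag it for review” idea concrete, here’s a minimal sketch. Everything in it is an assumption for illustration: the `NoteSentence` type, the `flag_for_review` function, the 0.8 threshold, and the sample note are all hypothetical, and the post doesn’t say how Abridge actually scores or surfaces confidence.

```python
from dataclasses import dataclass


@dataclass
class NoteSentence:
    """One sentence of a drafted note plus a model confidence score (hypothetical)."""
    text: str
    confidence: float  # assumed to fall between 0 and 1


def flag_for_review(sentences, threshold=0.8):
    """Return the sentences whose confidence falls below the threshold.

    The default threshold is an arbitrary illustrative value, not a real
    product setting.
    """
    return [s for s in sentences if s.confidence < threshold]


# Made-up draft note for the example.
draft = [
    NoteSentence("Patient reports chest ache for three days.", 0.95),
    NoteSentence("Start medication twice daily.", 0.55),
    NoteSentence("Follow up in two weeks.", 0.90),
]

needs_review = flag_for_review(draft)
for sentence in needs_review:
    print(f"REVIEW: {sentence.text} (confidence {sentence.confidence:.2f})")
```

The design choice worth noticing is that the clinician only looks at the handful of flagged lines instead of rereading the whole note, which is where the time savings would come from.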

Willingness to record - Abridge does require some behavior change for both patients and doctors who need to consent and have a willingness to be recorded. Doctors could feel uncomfortable that they can’t give their “honest” assessment if they’re being recorded. Or any user might worry about how data is stored and who gets access since the conversation data is intimate. Abridge has a long but very easily readable page dedicated to its privacy policy and how data is used, which you can read in full here.

It’ll be interesting to see if this behavior changes, but there’s a good chance that the administrative benefits of reduced documentation + having an easy to access history will outweigh concerns here. Also, many doctors use scribes who are watching the visit happen so they clearly aren’t opposed to a third-party seeing the visit happen in general.

Conclusion

I’ve been messing around with Abridge for a few years now and it’s been cool to see how much better the tech has gotten over time. Shiv the CEO has pretended to diagnose me with every condition under the sun to test if the software can pick it up, but also to see how I’d react to being told I’m pregnant.

Historically, the adoption of cool new software in healthcare has been… less than great. But voice + NLP might actually be the perfect wedge here because of how much information is shared in a conversation, how bad the documentation burden has become, and how many different use cases it can be applied to.

Give it a spin for yourself. If you’re an individual clinician, Abridge is giving the first 20 OOP readers a free month to give it a run. Sign up for it here.

If you’re an enterprise looking to implement this in your workflows, contact Abridge. I’m sure you’ll find a use case it could help with.

Thinkboi out,

Nikhil aka. “Ask me about my other hot food takes”

Twitter: @nikillinit

Other posts: outofpocket.health/posts


---

If you’re enjoying the newsletter, do me a solid and shoot this over to a friend or healthcare slack channel and tell them to sign up. The line between unemployment and founder of a startup is traction and whether your parents believe you have a job.

Healthcare 101 Starts soon!

Our crash course teaches the basics of US healthcare in a simple to understand and fun way. Understand who the different stakeholders are, how money flows, and trends shaping the industry.

Each day we’ll tackle a few different parts of healthcare and walk through how they work with diagrams, case studies, and memes. Lightweight assignments and quizzes afterward will help solidify the material and prompt discussion in the student Slack group.


Interlude - Our 3 Events + LLMs in healthcare

We have 3 events this fall.

Data Camp sponsorships are already sold out! We have room for a handful of sponsors for our B2B Hackathon & for our OPS Conference both of which already have a full house of attendees.

If you want to connect with a packed, engaged healthcare audience, email sales@outofpocket.health for more details.