Fitbits, Bundled Payments, and Rollercoasters

Get Out-Of-Pocket in your email

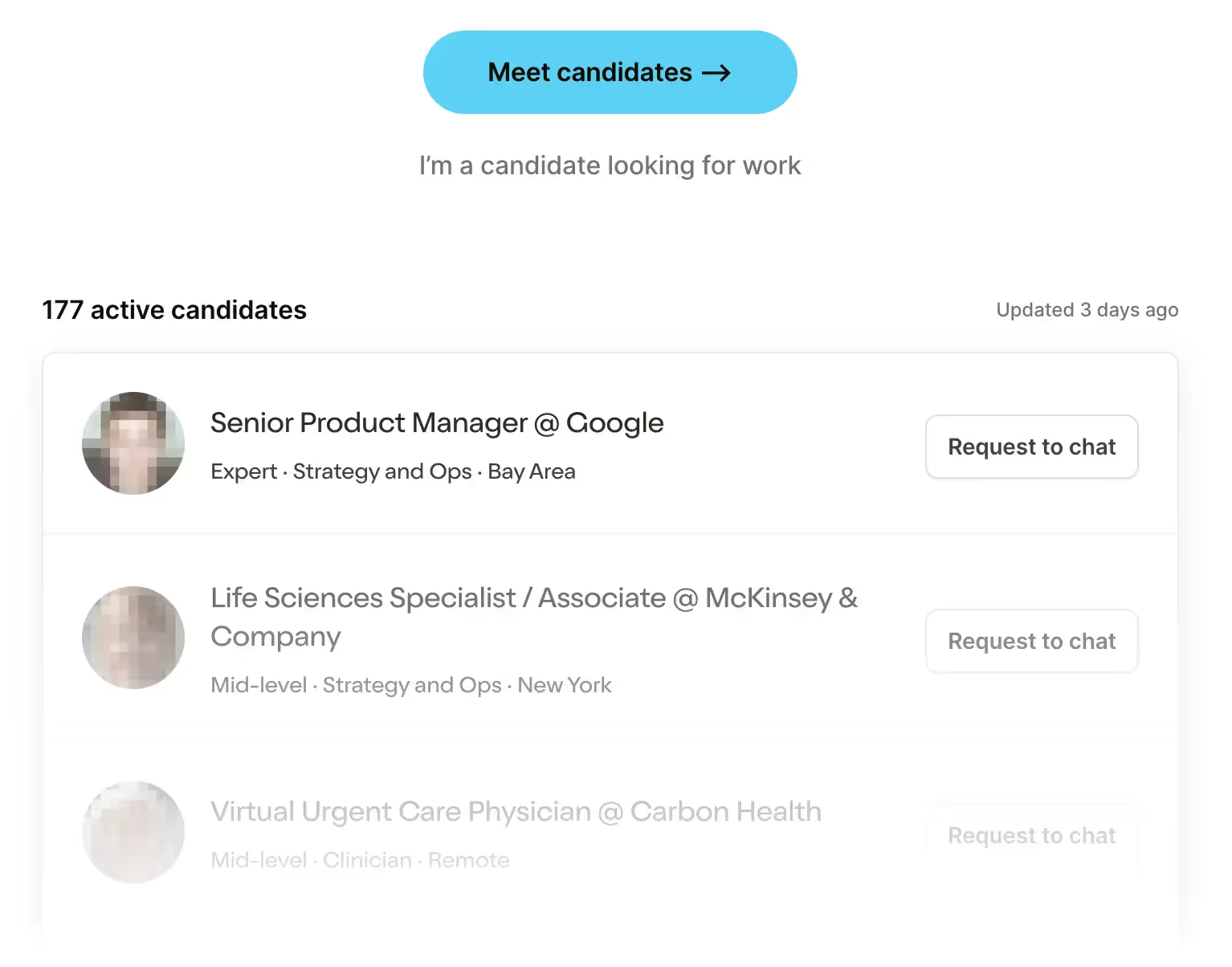

Looking to hire the best talent in healthcare? Check out the OOP Talent Collective - where vetted candidates are looking for their next gig. Learn more here or check it out yourself.


Featured Jobs

Finance Associate - Spark Advisors

- Spark Advisors helps seniors enroll in Medicare and understand their benefits by monitoring coverage, figuring out the right benefits, and dealing with insurance issues. They're hiring a finance associate.

- firsthand is building technology and services to dramatically change the lives of those with serious mental illness who have fallen through the gaps in the safety net. They are hiring a data engineer to build first of its kind infrastructure to empower their peer-led care team.

- J2 Health brings together best in class data and purpose built software to enable healthcare organizations to optimize provider network performance. They're hiring a data scientist.

Looking for a job in health tech? Check out the other awesome healthcare jobs on the job board + give your preferences to get alerted to new postings.

Here are some papers I like. They range from decentralized trial design, to hospitals playing games with bundled payments, to strapping cows into rollercoasters.

Throwing A Fitbit

Here is a paper about the design of Fitbit’s heart study.

The study is looking at whether or not Fitbits can detect atrial fibrillation (an irregular heart rhythm) early enough to avoid a serious heart issue down the line.

This study design encapsulates almost everything about where research and care delivery are headed.

First let’s talk about enrollment. The study enrolled 455K+ people in less than 4 months, which is an almost laughably large scale. One reason this was doable is that the trial was fully decentralized (no physical sites patients would have to go to). This also enabled much more geographic and racial diversity. Even though the participants aren’t exactly representative of the population, the sheer size of the study means sub-population analyses can still be large.

Another reason enrollment was easier is that Fitbit already had distribution for recruitment. You can actually see how effective the in-app recruiting notification was, a testament to what you can do when you already have a relationship with end users.

Another thing you can do with an existing relationship is get data that was collected before the patient had signed up for the study. This is useful because if the study requires an intervention of some sort, you can compare the results against a pre-study baseline. Even if there isn’t a direct intervention, sometimes patients start “acting healthier” when they know they’re a part of the study and you want to control for that.

“After passing initial screening and consenting to the trial, participant Fitbit PPG data are collected and analyzed prospectively until a device detection occurs or trial completion. Up to 30-days of available retrospective data are analyzed at study start and participants are notified if a detection occurs during this retrospective period within 1-2 days of enrollment. After the retrospective data analysis, data are analyzed prospectively for the remainder of the subject’s participation.”

This study design also shows us what care delivery and remote monitoring will probably look like in the future.

- Instead of capturing data once in office, the Fitbit captures data through the course of the day including when you’re sleeping (it’s not creepy if it’s for science?). This makes it more likely to capture abnormalities and see if the abnormality shows up consistently or during certain times of the day, etc.

- If an abnormality is detected, the device notifies you and connects you to a physician via telemedicine (on PlushCare) who then sends you an ePatch (a single-lead ECG). Presumably you can even do this with 6-lead ECGs to increase accuracy as well.

- The provider reviews your ePatch results, and if it seems serious they encourage you to see your doctor in-person or connect you to a doctor if you don’t have one.

This is exactly what a proactive care delivery system should look like. Everyday devices for monitoring with lower but acceptable sensitivity/specificity, telemedicine consult if there are issues, higher sensitivity/specificity diagnostic tools to confirm, and referral to the right level of care after another telemedicine consult. This is especially helpful for diseases that patients don’t physically feel until it’s too late.
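To see why the "cheap screen first, better test second" setup works, here's a rough sketch of the math. Every number below is made up for illustration (none of it comes from the Fitbit paper), and it assumes the two tests make errors independently of each other, which is a simplification.

```python
def ppv(prevalence, sensitivity, specificity):
    """Positive predictive value: P(has condition | positive test), via Bayes' rule."""
    true_pos = prevalence * sensitivity
    false_pos = (1 - prevalence) * (1 - specificity)
    return true_pos / (true_pos + false_pos)

# Hypothetical inputs, NOT taken from the study.
prevalence = 0.01                                      # assumed share of wearers with undiagnosed AFib
wearable = dict(sensitivity=0.80, specificity=0.95)    # stage 1: everyday device
patch = dict(sensitivity=0.95, specificity=0.99)       # stage 2: confirmatory ECG patch

# Stage 1: chance that someone flagged by the wearable really has the condition.
stage1 = ppv(prevalence, **wearable)

# Stage 2: the flagged group is the new "population", so the stage-1 PPV
# becomes the prior for the confirmatory test.
stage2 = ppv(stage1, **patch)

print(f"PPV after wearable alert: {stage1:.1%}")
print(f"PPV after patch confirms: {stage2:.1%}")
```

The point of the sketch: with a rare condition, most alerts from the everyday device are false alarms, but running only the flagged people through a more accurate test makes a confirmed result much more trustworthy.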

It’s funny to see some of the unexpected tricky parts that come up as proactive care workflows are built, which the paper talks about. For example, you need to be able to send a push notification to a person if an abnormality is detected. But you can’t actually have a push notification that says “yo you might be dying” in case someone else opens your phone screen, but you need something equally important looking...

“To reduce the risk of the incorrect person receiving information related to a potentially sensitive medical issue, the notification indicates there is a study related update which requires the participant to login with their Fitbit credentials to view.”

I have enough friends who refuse to update their apps regularly to know this is not effective lmao.

Fitbit’s trial design highlights how research and care delivery are blending together. Biobanks and virtual research studies are massive, new software development kits like ResearchKit are making it easy to spin up research via iPhones, and COVID spurred lots of healthy people to join studies to be monitored over long periods of time. It’s not inconceivable that a larger % of the population will be a part of studies in some capacity. It might even be a flex to say you’re taking part in research.

With more of that research including monitoring + some form of intervention, getting care and taking part in research will get more blurred.

This paper is just a look at the study design; the results have not been posted yet. There are still going to be some issues with this trial design (non-randomized, patients needed to have a Fitbit to join, etc.) but I thought it was cool either way.

Smoking A Joint (replacement) - The Fall Of CJR

Here is a super interesting paper that looked at spend in a value-based care model for joint surgery.

A little background first. For some types of care, you can generally define all the things that go into that “episode” of care. If you’re getting a lower-extremity joint replacement (LEJR) like a knee replacement, then there’s some pre-surgery consultation, the surgery itself, and the recovery + rehabilitation afterwards. Since you can define all the things that should typically happen in that episode, you can also estimate what it should cost and what “quality” care looks like.

This is what “bundled payment” programs aim to do. For a given procedure that has a defined episode, the payer reimburses as normal, and at the end of the year compares what you spent vs. the target price. If you were below that amount and you hit certain quality metrics, you get a bonus. In “upside-only” models, there’s no penalty if you don’t end up coming in under the target. In “upside-downside” models, there is a penalty but usually the bonus is also higher.
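Here's a minimal sketch of that year-end reconciliation logic. The function name and dollar amounts are hypothetical, and it's heavily simplified: real programs typically cap bonuses and penalties and only share part of the difference, and this doesn't model the bigger bonus that upside-downside models usually offer.

```python
def reconcile(actual_spend, target_price, met_quality, downside_risk):
    """Year-end adjustment for one bundle (positive = bonus, negative = penalty).

    Simplified illustration: the full difference is paid out or clawed back.
    """
    difference = target_price - actual_spend
    if difference > 0:
        # Came in under target: bonus only if quality metrics were also met.
        return float(difference) if met_quality else 0.0
    # At or over target: a penalty applies only in upside-downside models.
    return float(difference) if downside_risk else 0.0


target = 25_000  # hypothetical target price for the episode

print(reconcile(23_000, target, met_quality=True, downside_risk=False))   # under target, bonus
print(reconcile(27_000, target, met_quality=True, downside_risk=False))   # over target, upside-only
print(reconcile(27_000, target, met_quality=True, downside_risk=True))    # over target, with downside
```

The only thing that changes between the two model types is what happens when spend lands above the target.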

An example is the Comprehensive Care for Joint Replacement (CJR) bundle that CMS put together. These lower limb surgeries are common among Medicare beneficiaries and are relatively routine/low-risk - a perfect candidate to test out a bundled arrangement. But there were a few additional quirks to this specific program:

- The benchmark used to figure out what the episode should cost was based on a blend of the individual hospital’s historical spend and regional spend for that episode, with the blend shifting towards regional spend over time. The point of shifting to regional spend is to bring expensive hospitals more in line with what spend looks like in the rest of their geography.

- There was no real risk-adjustment in this program. Usually if a hospital sees sicker patients that will be more expensive and complicated to treat, a value-based care program will factor that in when considering reimbursement or quality metrics.

- Initially the program was mandatory. Within a given geography, a.k.a. a metropolitan statistical area (MSA), hospitals were split into high spenders and low spenders. Then they were RANDOMIZED into the CJR bundle, which is kind of rare for these programs.

- In year 2, this bundle shifted to an upside-downside risk model, where penalties were introduced if targets weren’t met.

- At the end of year 2, CMS made the program voluntary for half of the geographies.

- At the end of year 2, CMS also allowed total knee replacements to be done in outpatient settings BUT the outpatient surgeries were not included in the CJR bundle. Outpatient settings typically are meant for less complicated patients, are lower cost to deliver care, and Medicare pays less for them.
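The blended benchmark in the first bullet is easier to feel with numbers. This is a rough sketch with made-up dollar amounts and a made-up weighting schedule (the actual CJR weights and prices aren't given here), showing how the target moves as the blend shifts toward regional spend.

```python
def blended_target(hospital_hist, regional_avg, regional_weight):
    """Target price as a weighted blend of hospital-specific and regional spend."""
    return (1 - regional_weight) * hospital_hist + regional_weight * regional_avg


def required_cut(hospital_hist, regional_avg, regional_weight):
    """Spend reduction needed to hit the target (negative = already under it)."""
    return hospital_hist - blended_target(hospital_hist, regional_avg, regional_weight)


regional_avg = 25_000                                   # hypothetical regional episode spend
hospitals = {"high_cost": 30_000, "low_cost": 22_000}   # hypothetical historical spend

for weight in (0.25, 0.5, 1.0):                         # hypothetical shift toward regional
    for name, hist in hospitals.items():
        target = blended_target(hist, regional_avg, weight)
        cut = required_cut(hist, regional_avg, weight)
        print(f"regional weight {weight:.2f} | {name}: target ${target:,.0f}, cut needed ${cut:,.0f}")
```

As the regional weight goes up, the high-cost hospital has to cut more and more to hit its target, while the low-cost hospital gets a target above what it already spends.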

This sets up an interesting experiment. Low-risk patients would be more likely to help a hospital come below the target spend for the CJR bundle and thus get financial bonuses. Hospitals are now incentivized to push lower-risk patients into higher cost inpatient procedures. But hospitals wouldn’t do that...right?

On top of that, by changing things to voluntary for some hospitals you can see whether the typically high cost hospitals stay in the program or not. The goal of the program is really to target those hospitals to help them bring their spend down and save more money for Medicare.

So what happened? After the outpatient surgery change and the switch to voluntary participation, the savings for the program dropped a ton in year 3.

The authors identify a few potential culprits for this.

The big one - high cost hospitals ended up dropping out when the program became voluntary. They were the biggest cost savers of the program, but also didn’t see it as worthwhile to try and bring their spend down just to be a part of the program. That sweet, sweet fee-for-service revenue is hard to quit.


The hospitals that had to stay in the program ended up pushing patients who should have been treated in outpatient settings into inpatient settings. This did not happen in the voluntary group.

Pushing low-risk patients to the inpatient setting was one risk selection game being played, but another was also just avoiding sicker patients altogether that might end up being more expensive.

There’s a lot you could take away from this study, but for me personally:

- It’s cool that we can test programs like this with actual randomization, even if the result is less than ideal. We should do that more because we learn about how different variables incentivize different things, and now CMS is making changes to take findings like this into account.

- Making things both voluntary and regionally benchmarked is destined to have high spenders drop out of a program.

- Risk-adjustment is a very delicate balance. If you give too much risk-adjustment, then payers/providers are going to optimize for making patients look as sick as possible. If you give too little risk-adjustment, you’ll end up penalizing payers/providers that see sicker patients.

- I just kinda don’t think existing hospitals will ever take programs like this seriously when they get most of their money from fee-for-service and are so operationally complex that they would have to make a lot of changes to take advantage of these programs. Instead you need companies to build their workflows and business models NATIVELY to programs like this and get the majority of their revenue from these bundles/value-based care programs etc.

Great paper, a thorough experiment in seeing the trade-offs made in creating a bundled payment program, and cool to see so clearly how providers respond to different incentives.

Rollercoasters, Kidney Stones, and Backpacks of Pee

Okay this is sort of a silly paper from a few years ago but it’s just too good not to share.

Researchers looked at whether a roller coaster could dislodge kidney stones.

The setting of the stage is great:

“Over several years, a notable number of our patients reported passing renal calculi spontaneously after riding the Big Thunder Mountain Railroad roller coaster at Walt Disney World’s Magic Kingdom theme park in Orlando, Florida. The number of stone passages was sufficient to raise suspicions of a possible link between riding a roller coaster and passing renal calculi. One patient reported passing renal calculi after each of 3 consecutive rides on the roller coaster. Many patients reported passing renal calculi within hours of leaving the amusement park, and all of them rode the same rollercoaster during their visit.”

So I guess somehow these docs are fitting a patient’s entire amusement park ride history into a 15-20 minute visit, and noticing a pattern. So they did what any urologist with an NIH grant would do - they created a silicone renal model with a kidney stone, put it into a backpack full of pee, and rode the roller coaster multiple times in different spots (with presumably other children on the ride blissfully unaware of the backpack nearby).

They actually iterated through a few ideas before landing on this one. In what seems to be a Mythbusters-themed brainstorming session, they tried doing this with ballistic jelly at first. And then also considered…strapping a cow or pig into the rollercoaster? And decided not to because it would be terrifying to children?

The kicker of the whole study is...the roller coaster actually does dislodge kidney stones lol. With the models they used, sitting in the back seat of the rollercoaster (specifically the Big Thunder Mountain ride at Disney) works ~2/3 of the time, even for large kidney stones that are typically hard to pass naturally. Unfortunately the researchers didn’t look into what musculoskeletal disorders you’d get from doing the ride multiple times.

In terms of cost-effectiveness, a lithotripsy to remove a kidney stone costs ~$7300. A day pass to Magic Kingdom is ~$110. You can use the leftovers to do all the amazing other things that Orlando has to offer like...Epcot I guess.
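For the spreadsheet people, here's the back-of-the-envelope version using the two rough prices above. It ignores travel, lodging, and churros, so treat it as a joke with arithmetic attached.

```python
lithotripsy_cost = 7300   # approximate cost quoted above, in dollars
day_pass_cost = 110       # approximate Magic Kingdom day pass, in dollars

# Money left over if one day at the park does the job.
leftover = lithotripsy_cost - day_pass_cost

# Whole day passes you could buy for the price of one lithotripsy.
passes_per_lithotripsy = lithotripsy_cost // day_pass_cost

print(f"Leftover after one day pass: ${leftover:,}")
print(f"Day passes per lithotripsy: {passes_per_lithotripsy}")
```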

So even though the premise of this study feels like a joke, it turns out to be a really practical and useful result. These are the kinds of tips that are shared anecdotally in patient communities that people aren’t sure whether to believe or not. I actually think there’s something interesting around running low-risk studies on rumors that patients swear by, seeing if they work, and then validating to patients that it might be worth trying. I’ve also seen in forums that people have passed kidney stones doing yoga. Unfortunately since hospitals can’t bill for rollercoaster rides, they aren’t exactly incentivized to look deeper into this kind of stuff.

If you have any weird remedies like rollercoasters for kidney stones, let me know cause I’m genuinely curious to hear it.

Finally, if you want to see a video walkthrough of this study you can watch it here. Somehow this guy doesn’t crack a smile ONCE through this whole thing.

Thinkboi out,

Nikhil aka. “Kingda Ka will also cure constipation”

Twitter: @nikillinit

---


If you’re enjoying the newsletter, do me a solid and shoot this over to a friend or healthcare slack channel and tell them to sign up. The line between unemployment and founder of a startup is traction (and whether your parents believe you have a job).

Healthcare 101 Starts soon!

Our crash course teaches the basics of US healthcare in a simple to understand and fun way. Understand who the different stakeholders are, how money flows, and trends shaping the industry. Each day we’ll tackle a few different parts of healthcare and walk through how they work with diagrams, case studies, and memes. Lightweight assignments and quizzes afterward will help solidify the material and prompt discussion in the student Slack group.


Interlude - Our 3 Events + LLMs in healthcare

We have 3 events this fall.

Data Camp sponsorships are already sold out! We have room for a handful of sponsors for our B2B Hackathon & for our OPS Conference both of which already have a full house of attendees.

If you want to connect with a packed, engaged healthcare audience, email sales@outofpocket.health for more details.