How Should We Evaluate Healthcare AI? Some Thoughts


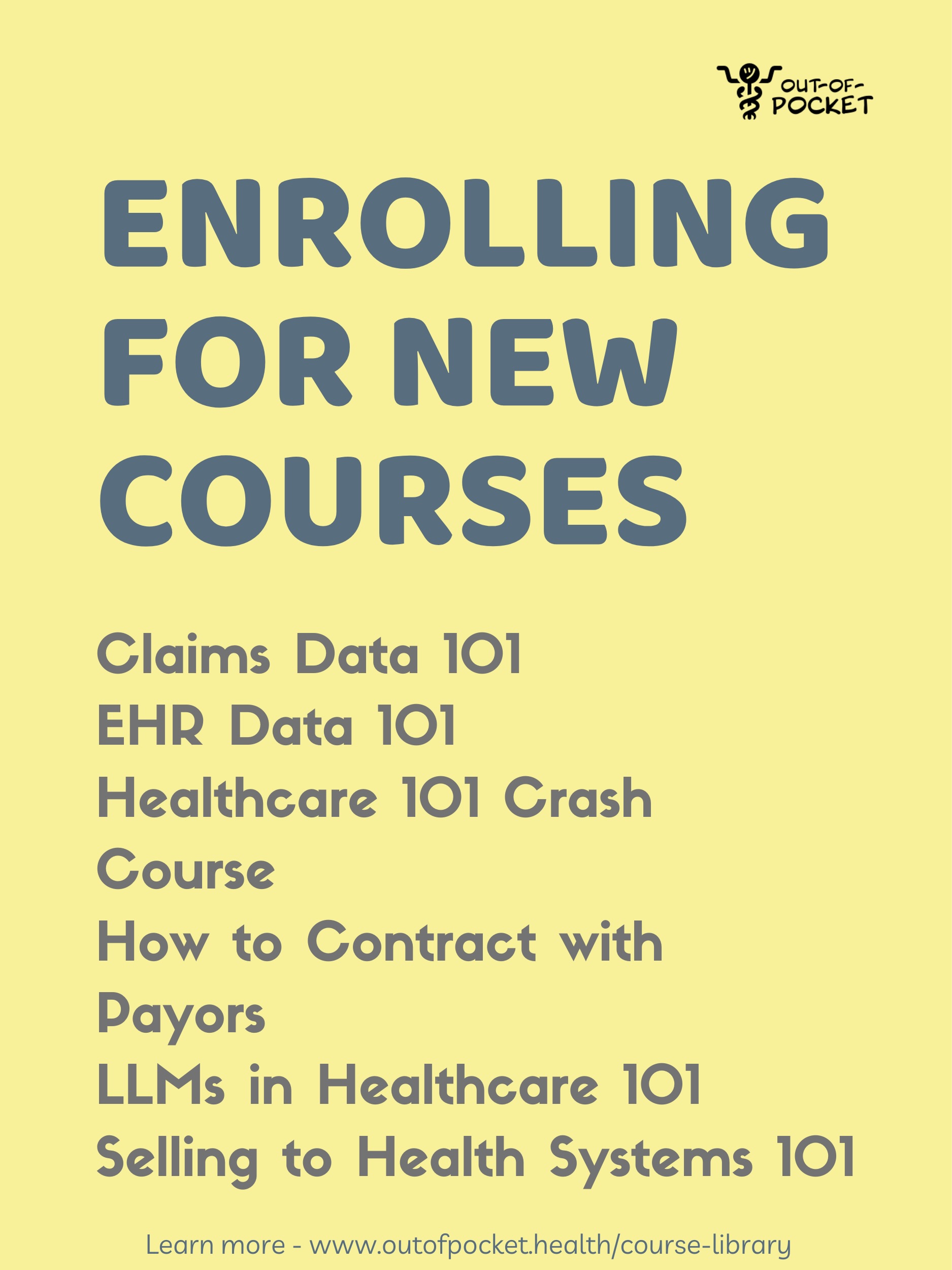

Looking to hire the best talent in healthcare? Check out the OOP Talent Collective - where vetted candidates are looking for their next gig. Learn more here or check it out yourself.


Featured Jobs

Finance Associate - Spark Advisors

- Spark Advisors helps seniors enroll in Medicare and understand their benefits by monitoring coverage, figuring out the right benefits, and dealing with insurance issues. They're hiring a finance associate.

- firsthand is building technology and services to dramatically change the lives of those with serious mental illness who have fallen through the gaps in the safety net. They are hiring a data engineer to build first-of-its-kind infrastructure to empower their peer-led care team.

- J2 Health brings together best-in-class data and purpose-built software to enable healthcare organizations to optimize provider network performance. They're hiring a data scientist.

Looking for a job in health tech? Check out the other awesome healthcare jobs on the job board + give your preferences to get alerted to new postings.

A New Microsoft AI Healthcare Paper - Some Context

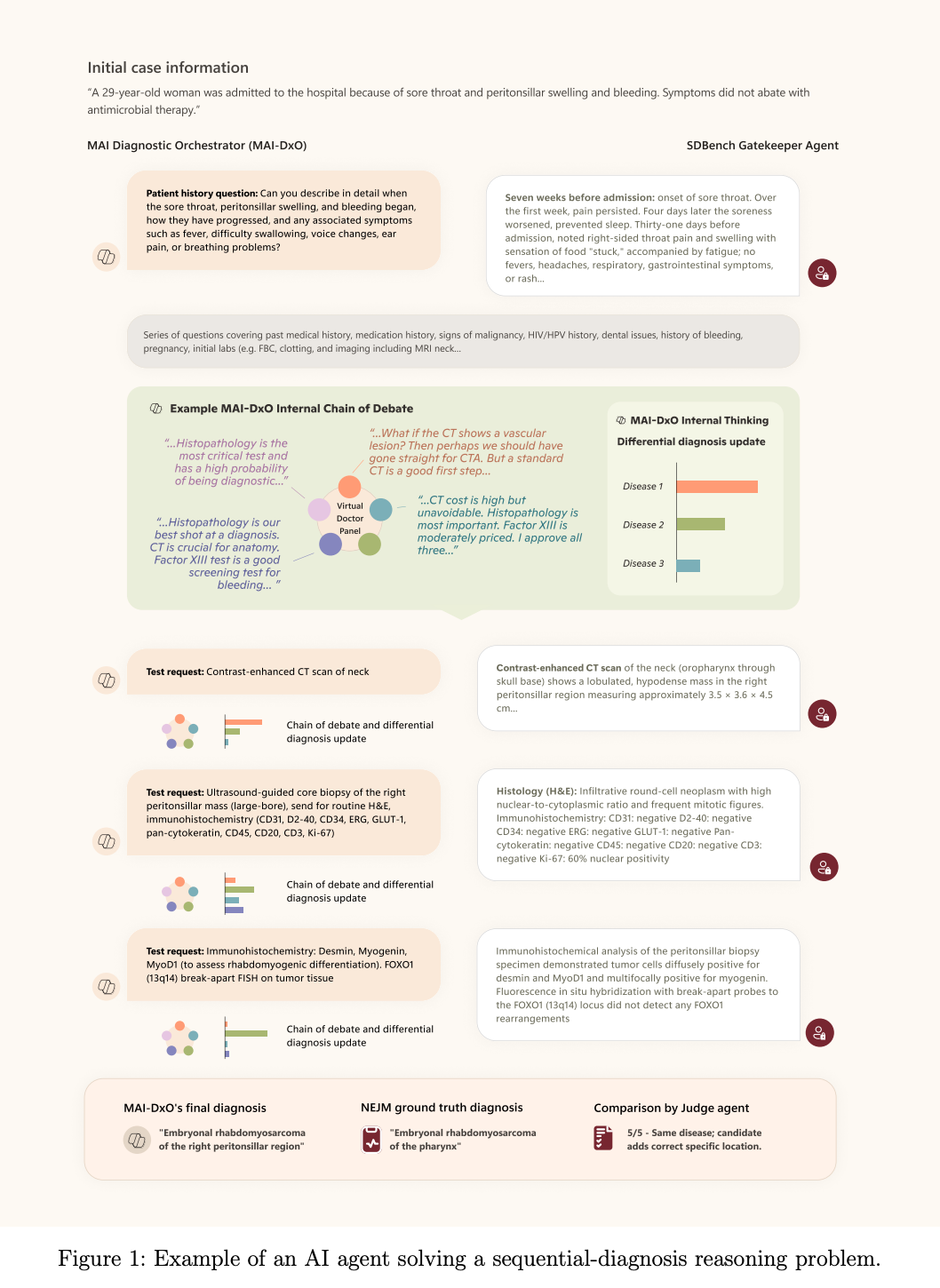

Microsoft’s AI team put out a paper recently that compared how AI did against doctors when looking at some medically complex cases from the New England Journal of Medicine.

A lot of AI models are compared against how doctors do on the USMLE licensing exam, which is also an Indian parent’s favorite pastime. But the USMLE is just multiple-choice questions. It’s easy to train on publicly available exams that have an answer key and get AI to pick the best guess among five possible answers. The risk is that you build an AI that overfits to the exam but doesn’t work well in the real world. Also a gifted and talented kid’s favorite pastime.

With the Microsoft paper, the interesting development is that the AI uses a stepwise reasoning pattern: it takes in new information as the case unfolds and adjusts its diagnostic workup accordingly.

The model has to figure out the best next steps while weighing different factors, including how cost-effective each choice is. A payer’s favorite pastime.

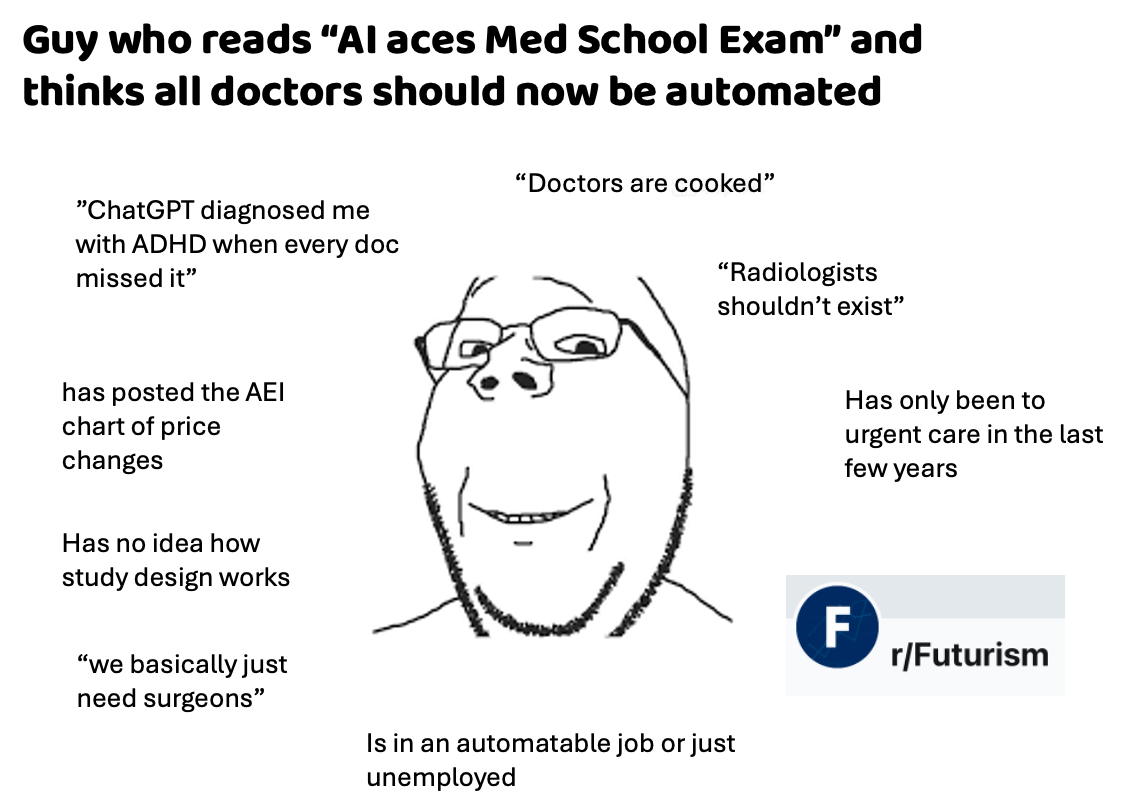

As you can imagine, the responses to the paper were all over the place:

- The libertarian bunch talked about how doctors will get replaced. The model did well, correctly identifying the diagnosis in up to 80%+ of the cases, which was much better than the physician group. The doctors talked about how these cases don’t represent reality.

- A lot of people asked why this was being tested on some of the most complex cases rather than on more everyday patient presentations.

- People were generally impressed that the reasoning steps actually do mimic what many doctors do in a workup.

- A lot of tweets started with “BREAKING: something sensationalist” and made me want to find the nearest bridge.

I’ve been thinking about AI evaluations and have had a bunch of conversations about this with people recently. I love talking. A few thoughts:


Should Patients Be Bringing In More Data?

Everyone (doctors on LinkedIn) talks about how real patients aren’t like the vignettes in these papers. But…maybe our goal should be making the visits as similar to the vignettes as possible.

You’d do this by getting as much information as possible about the patients from any source available, without the patient needing to remember all the details themselves. Things like:

- Making it easier for providers to pull data from health information exchanges across states and parsing the information so that only the relevant stuff is there

- Being able to share relevant ChatGPT history with your doctor for insights into how your disease has progressed, or letting them pull /history from years ago (e.g. you asked about side effects of medication X three years ago, so you were probably on that med)

- Creating collaborative ChatGPT-esque workspaces between you and your doctor where autonomous agents handle triaging/intake/monitoring and the doctor can see a summary of what happened

- Wearables and home hardware that can more passively monitor conditions so the patient doesn’t have to remember metrics

- Alexas, apps, or automated calls that ask the patient about how they’re feeling and progression of their symptoms on a regular basis and synthesize that into the record

Smush this all together and it should look like the vignettes they test these models on.

The historical argument has been that all of this data places unnecessary work on clinicians. Data from different places comes in different formats, so they have to stitch it together themselves. Or most of the data isn’t useful to the doctor because so much of it is junk or unactionable, so it just bloats everything.

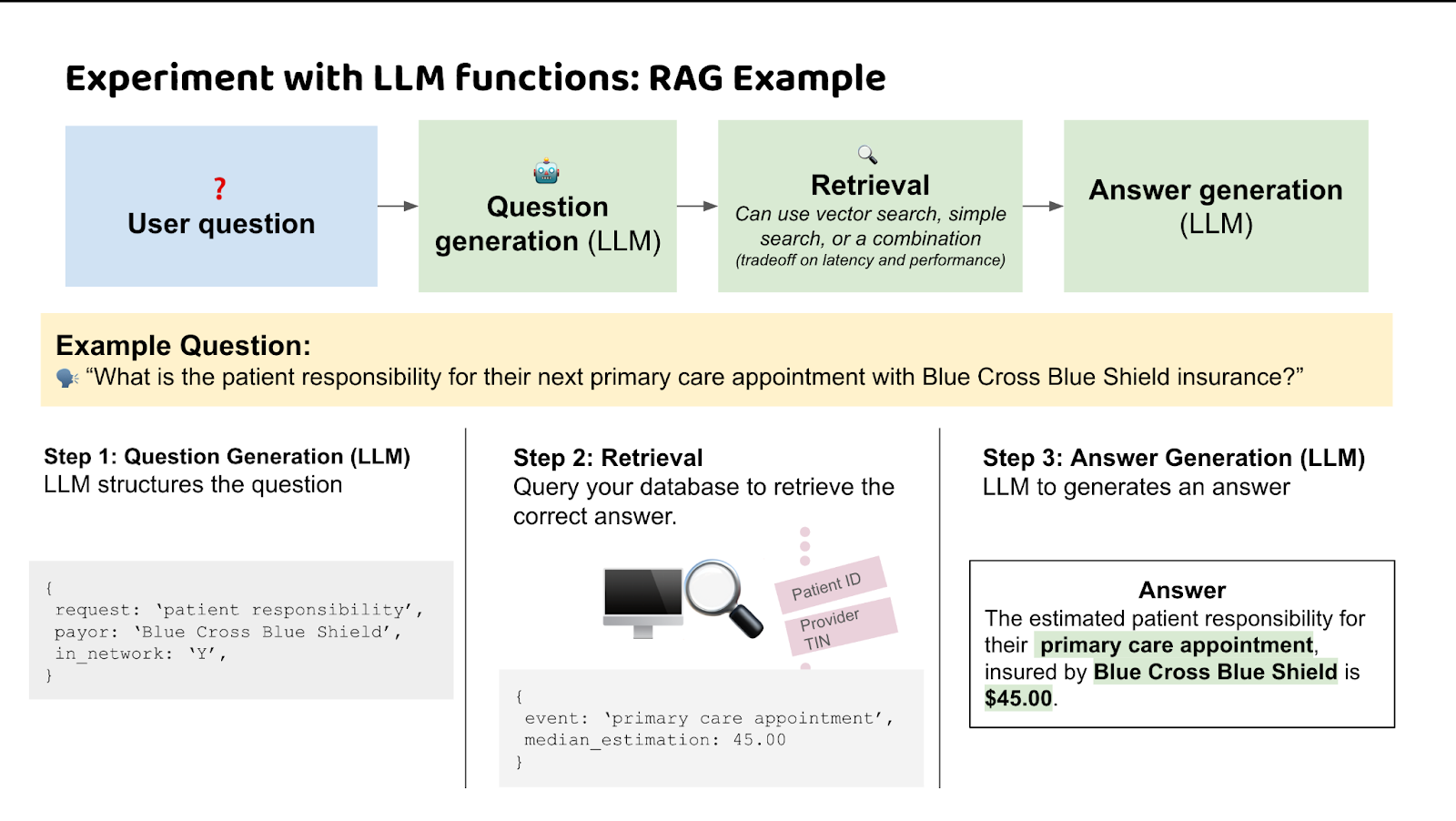

However, the great part is that AI can now act as a good synthesis tool. Semantic interoperability can take data from different places/formats and connect it based on meaning. Contextual Retrieval Engines can take contextual data about the patient and surface only the anomalies or areas to dig deeper into. The barrier is no longer interpretation.

[Psst, we talk about this in our upcoming LLMs in healthcare course, which starts 9/8.]

We’re far away from this today, but there’s a line of sight into how you might make an incoming patient look more like a vignette even if it’s not perfect.
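To make the synthesis idea a bit more concrete, here’s a toy Python sketch, not how any real product works. It assumes records arrive in chronological order as simple dicts, uses a hypothetical hand-written name map as a stand-in for real semantic interoperability (which would map to a standard terminology such as LOINC), and treats an “anomaly” as a latest value more than k standard deviations from the patient’s own baseline. The field names, data, and threshold are all made up for illustration.

```python
from statistics import mean, stdev

# Hypothetical map from source-specific field names to one canonical name.
# A real system would map to a standard terminology (e.g. LOINC); this dict is a stand-in.
CANONICAL_NAMES = {
    "rhr_bpm": "resting_heart_rate",
    "HR (rest)": "resting_heart_rate",
}


def normalize(records: list[dict]) -> dict[str, list[float]]:
    """Merge records from different sources into one value series per canonical metric.

    Assumes records are already in chronological order.
    """
    series: dict[str, list[float]] = {}
    for rec in records:
        name = CANONICAL_NAMES.get(rec["field"], rec["field"])
        series.setdefault(name, []).append(float(rec["value"]))
    return series


def surface_anomalies(series: dict[str, list[float]], k: float = 2.0) -> dict[str, float]:
    """Return each metric whose latest value is more than k SDs from the patient's own baseline."""
    flagged: dict[str, float] = {}
    for name, values in series.items():
        if len(values) < 3:
            continue  # too little history to define a baseline
        baseline, latest = values[:-1], values[-1]
        sd = stdev(baseline)
        if sd > 0 and abs(latest - mean(baseline)) > k * sd:
            flagged[name] = latest
    return flagged


records = [
    {"source": "wearable", "field": "rhr_bpm", "value": 60},
    {"source": "wearable", "field": "rhr_bpm", "value": 62},
    {"source": "wearable", "field": "rhr_bpm", "value": 61},
    {"source": "wearable", "field": "rhr_bpm", "value": 59},
    {"source": "clinic_ehr", "field": "HR (rest)", "value": 78},
    {"source": "phone_app", "field": "daily_steps", "value": 7400},
]
print(surface_anomalies(normalize(records)))  # {'resting_heart_rate': 78.0}
```

Using the patient’s own history as the baseline, rather than a population reference range, is one simple way to make the retrieval “contextual.” Everything else (the 1,000 other fields in a real chart) just never gets surfaced.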

AI For Simple Conditions Will Have A Bigger Impact

It’s clear that AI will eventually be able to help with complex conditions AND really simple ones. Complex conditions make sense! They usually require synthesizing data across a bunch of different places (from both the patient and the research side) in ways that far exceed the capacity of any one doctor. Plus, most doctors might only see a given presentation a handful of times; AI has “seen” it tons of times.

I can understand why studies look at complex cases. There’s immediate utility as decision support for doctors, it can slot into existing workflows more easily, and it’s a way to show off how the advanced model performs.

But personally I think the more interesting area is AI that can autonomously handle the really basic cases with no doctor in the loop (including ordering labs, prescribing low risk drugs, etc.). This is for a few reasons:

- There’s more consistency on what should be done for simple cases, so theoretically it should be easier to evaluate.

- If you can actually show the AI performs just as well in simple routine cases, we might be able to roll out autonomous doctors for certain types of visits. Yes, a lot more needs to be figured out (liability, escalation for urgent issues, etc.). But this would represent a total restructuring in how people get care.

- Simple routine cases apply to a much larger swath of the population than the complex cases that show up in NEJM. Those simple cases are where patients feel most of the frustration. If I already know what the issue is because I’ve had it many times before, why do I need to go see the doctor, wait, etc.?

- The US is already pretty good at complex care! It’s actually where we tend to shine. Plus, doctors are already going to use these tools for complex care regardless of what these studies say.

Designing A Study To Test Healthcare AI Is Hard

Figuring out how to set up a study to evaluate AI is really hard! You need to figure out:

- How much data and what tools do the AI and doctors have? In the Microsoft paper, for example, the physicians couldn’t use external sources like Google or medical info sites. Just rawdogging pathophysiology like a madman. Or, if the AI is looking at cases retrospectively, it can “peek” into future labs/labels, etc., which can distort the results.

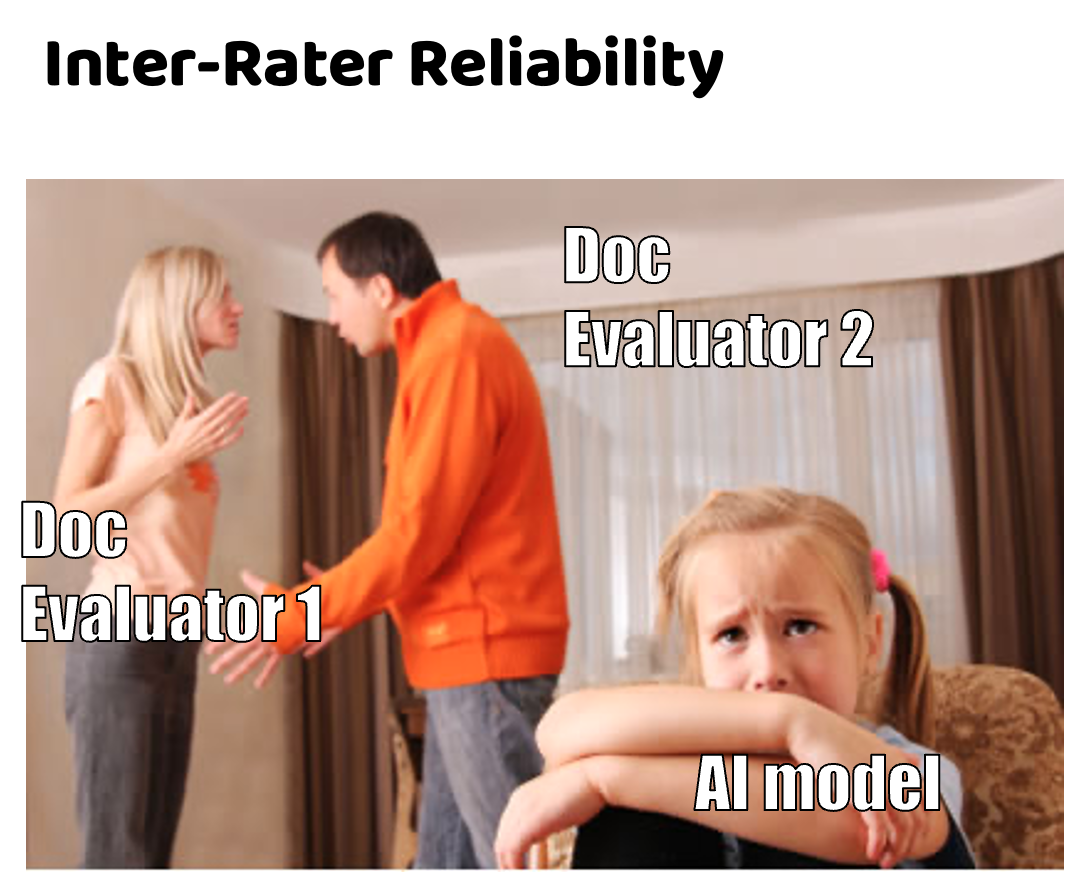

- What counts as the ground truth? Docs frequently disagree on cases, so which docs do you pick to judge correctness? For example, in the OpenAI study with Penda Health in Kenya, two physicians looking at the same case disagreed 25-33% of the time!

- If you incorporate aspects like cost-effectiveness, it will change downstream choices. These are the same tradeoffs you have to think about in the real world, and we don’t even have consensus on them today.

- What does the modality look like? Do you tell patients they’re being seen by an AI? That might change their interactions. Do doctors see the AI answers before they give their decisions? That might influence them.
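One way to put a number on the ground-truth problem is to measure how often reviewing physicians agree beyond what chance alone would produce. A minimal Python sketch, assuming two reviewers label the same cases with a categorical verdict, computes raw agreement and Cohen’s kappa. The labels are invented for illustration, not data from the Penda Health study.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> tuple[float, float]:
    """Return (raw agreement, Cohen's kappa) for two raters labeling the same cases."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of cases")
    n = len(rater_a)
    # Observed agreement: share of cases where both raters gave the same label.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement, from each rater's own label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        return p_o, 1.0  # both raters gave every case the same single label
    return p_o, (p_o - p_e) / (1 - p_e)


# Made-up verdicts on 8 cases from two reviewing physicians.
reviewer_1 = ["correct", "correct", "error", "correct", "correct", "error", "correct", "correct"]
reviewer_2 = ["correct", "error", "error", "correct", "correct", "correct", "correct", "correct"]
agreement, kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"raw agreement {agreement:.2f}, kappa {kappa:.2f}")  # raw agreement 0.75, kappa 0.33
```

A 75% raw agreement rate sounds decent, but when most cases land in one category a lot of that agreement happens by chance, which is why kappa comes out much lower here.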

I’m interested in other types of study designs that might be able to test this in the future. For example, could you take an AI scribe recording a regular doc’s visit and measure how concordant the AI’s plan is with the doc’s? Could you look at cases where the AI disagreed with the doc but ended up being right? If the scribe agrees with you, it’s documentation. If it doesn’t, it’s “for quality training purposes.”
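The scribe-concordance idea could be tallied with something like this minimal Python sketch. It assumes each visit records the doctor’s plan, the AI’s plan, and, for discordant visits, a hypothetical after-the-fact adjudication of who was right; the `Visit` fields and plan strings are made up and don’t come from any real scribe product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Visit:
    doctor_plan: str
    ai_plan: str
    # Hypothetical adjudication for discordant visits: "ai", "doctor", or None if not reviewed.
    adjudicated_winner: Optional[str] = None


def concordance_report(visits: list[Visit]) -> dict[str, float]:
    """Summarize how often the AI matched the doctor, and who was right when they disagreed."""
    total = len(visits)
    discordant = [v for v in visits if v.ai_plan != v.doctor_plan]
    reviewed = [v for v in discordant if v.adjudicated_winner is not None]
    ai_wins = sum(v.adjudicated_winner == "ai" for v in reviewed)
    return {
        "concordance_rate": (total - len(discordant)) / total if total else 0.0,
        "discordant_visits": float(len(discordant)),
        # Share of reviewed disagreements where the adjudicator sided with the AI.
        "ai_right_when_discordant": ai_wins / len(reviewed) if reviewed else 0.0,
    }


visits = [
    Visit("rest + fluids", "rest + fluids"),
    Visit("rapid strep test", "rapid strep test"),
    Visit("antibiotic", "no antibiotic", adjudicated_winner="ai"),
    Visit("no imaging", "x-ray", adjudicated_winner="doctor"),
]
print(concordance_report(visits))
# {'concordance_rate': 0.5, 'discordant_visits': 2.0, 'ai_right_when_discordant': 0.5}
```

Exact string matching is a crude stand-in for concordance; a real study would compare plan components (labs, meds, referrals) and need an agreed-upon adjudication process, which loops right back to the ground-truth problem.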

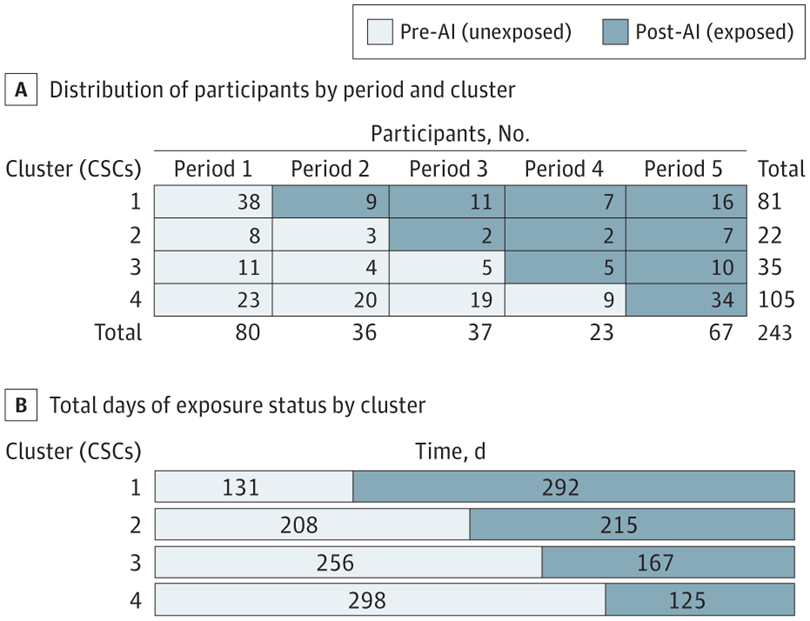

Felix Baldauf-Lenschen proposed one design inspired by Viz.ai’s paper to test their stroke detection algorithm. I thought it was interesting:

“This is the kind of study / cluster randomized trial I would love to see:

- N physicians in the emergency department

- Randomize physicians 1:1 into control and interventional arm, in which only the interventional arm can use AI scribe / diagnostic AI tool for patient encounters

- Randomize new patients presenting to the ED 1:1 to physician arm

  - note: for a given patient during the study, try to limit interactions between physicians that are in different groups to isolate the potential effect on outcomes

- After 1 month, swap the groups so that the physician control arm now gets to use the product and the original interventional arm does not

- Outcome measures by physician trial arm:

  - Inpatient admission rate

  - ICU admission rate

  - 30-day mortality rate

  - Readmission rate

  - Length of stay / time to discharge

  - Patient wait times

If a new product is truly transformative (which I believe and hope some of these tools will be), then you should be able to see that via improvements in actual patient outcomes and hospital throughput/efficiency.”
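The assignment part of that design can be sketched in a few lines of Python. This assumes hypothetical physician IDs, a fixed seed for reproducibility, and two periods (month 1 and the post-swap month 2); it covers randomization only, not the cross-arm interaction limits or the outcome analysis.

```python
import random


def assign_physician_arms(physician_ids: list[str], seed: int = 0) -> dict[str, str]:
    """Randomize physicians 1:1 into 'ai' (interventional) and 'control' arms for month 1."""
    if len(physician_ids) < 2:
        raise ValueError("need at least two physicians to randomize 1:1")
    rng = random.Random(seed)
    shuffled = list(physician_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {pid: ("ai" if i < half else "control") for i, pid in enumerate(shuffled)}


def arm_in_period(month1_arm: str, period: int) -> str:
    """Crossover: in month 2 every physician swaps arms."""
    if period == 1:
        return month1_arm
    return "control" if month1_arm == "ai" else "ai"


def assign_patient(rng: random.Random, arms: dict[str, str], period: int) -> str:
    """Randomize a new ED patient 1:1 to an arm, then to a physician currently in that arm."""
    target_arm = rng.choice(["ai", "control"])
    candidates = sorted(pid for pid, arm in arms.items() if arm_in_period(arm, period) == target_arm)
    return rng.choice(candidates)


arms = assign_physician_arms(["dr_a", "dr_b", "dr_c", "dr_d"], seed=42)
patient_rng = random.Random(7)
month1_doc = assign_patient(patient_rng, arms, period=1)
month2_doc = assign_patient(patient_rng, arms, period=2)
print(arms, month1_doc, month2_doc)
```

Randomizing each patient to an arm first, then to a physician within that arm, keeps the patient split 1:1 even if the arms end up different sizes (e.g. with an odd number of physicians).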

AI Mistakes vs. Human Mistakes

How should we compare AI mistakes vs. human mistakes? Doctors make mistakes all the time, but we don’t revoke a doc’s medical license if they make a few mistakes. The bar for AI is way higher: it has to be nearly perfect and tested in every possible scenario. As soon as it makes a few mistakes at the edges, we feel uncomfortable putting it into production.

It also raises the question of whether “concordance with what the doctor said” is really the right metric. What if the AI is being compared to a bad doctor? We probably wouldn’t want it to match their answers then.

There are probably a few reasons for this double standard. We have a liability framework for doctors making mistakes in the form of malpractice. Doctors have a code of ethics, review boards, and social feedback that push them to do the right thing. We’re still figuring out how to handle liability when AI gets something wrong, and what motivates it to do the right thing. Why does mine keep agreeing with me? (Other than the fact that I’m right.)

But if AI is already better than the bottom quartile of docs, who consistently get simple cases wrong, shouldn’t we already be getting it out there? Wait, but how do we identify who’s in the bottom quartile of docs? Well, that’s other docs’ favorite pastime.
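The arithmetic part of the bottom-quartile question is easy once you have per-doctor accuracy on a shared set of simple cases; getting those numbers is the hard part. A minimal Python sketch, assuming made-up accuracy figures, uses the standard library’s `statistics.quantiles` to find the 25th-percentile doctor and checks whether the AI clears it.

```python
from statistics import quantiles


def beats_bottom_quartile(ai_accuracy: float, doctor_accuracies: list[float]) -> tuple[bool, float]:
    """Return whether the AI's accuracy beats the 25th-percentile doctor, plus that cutoff."""
    if len(doctor_accuracies) < 2:
        raise ValueError("need accuracy numbers for at least two doctors")
    # quantiles(..., n=4) returns the three quartile cut points; index 0 is the 25th percentile.
    q1 = quantiles(doctor_accuracies, n=4, method="inclusive")[0]
    return ai_accuracy > q1, q1


# Made-up per-doctor accuracy on the same set of simple cases.
doctor_accuracy = [0.62, 0.70, 0.74, 0.78, 0.81, 0.85, 0.88, 0.90]
beats, cutoff = beats_bottom_quartile(0.80, doctor_accuracy)
print(beats, round(cutoff, 2))  # True 0.73
```

Clearing the cutoff on average says nothing about whether the AI’s mistakes are the same kind of mistakes, which is exactly the “mistakes at the edges” worry.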

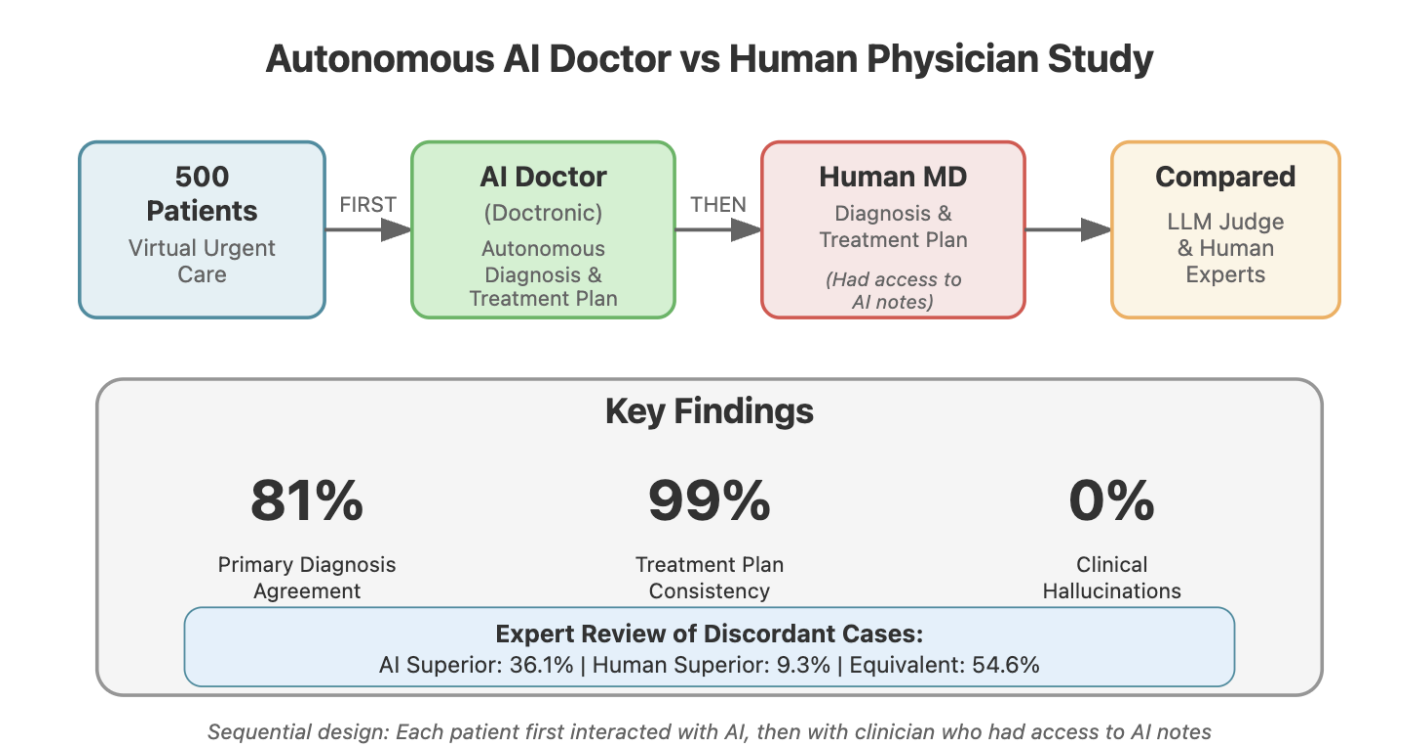

A Parallel Universe Paper - the Doctronic paper

While the Microsoft paper looked at complex cases, a new preprint from Doctronic released around the same time looked at whether its AI agents could autonomously handle common urgent care cases in real-world settings. Sure, it was retrospective, and the doc saw the AI’s notes, which could have influenced them.

But the performance was pretty good! The AI’s treatment plan was pretty much identical to what the doctor would order, and in cases where the AI and humans disagreed, a reviewing clinician found the AI’s plan to be better in a large chunk of them.

It’s cool to see these agents used in more of a real-world setting, with simpler cases, and with a potential path to truly autonomous visits. Doctronic seems to be trying to use these autonomous visits to scale up patient panels per doc and bring the price of a visit down to cash-pay levels.

But the question it doesn’t answer is liability. The most likely liability framework here is a doc taking on the liability if the AI is wrong, but also getting the upside of seeing 10x more patients if the bot can handle all the simple cases.

Conclusion - Why Do We Even Do These Studies?

One of the debates I’ve been having with people is…what’s even the point of these papers? It’s pretty clear that AI is going to permeate and change medicine. When the internet came out, we didn’t do studies on how it would change care before rolling it out. A lot of docs and patients are going to use these tools either way; these studies aren’t going to stop them.

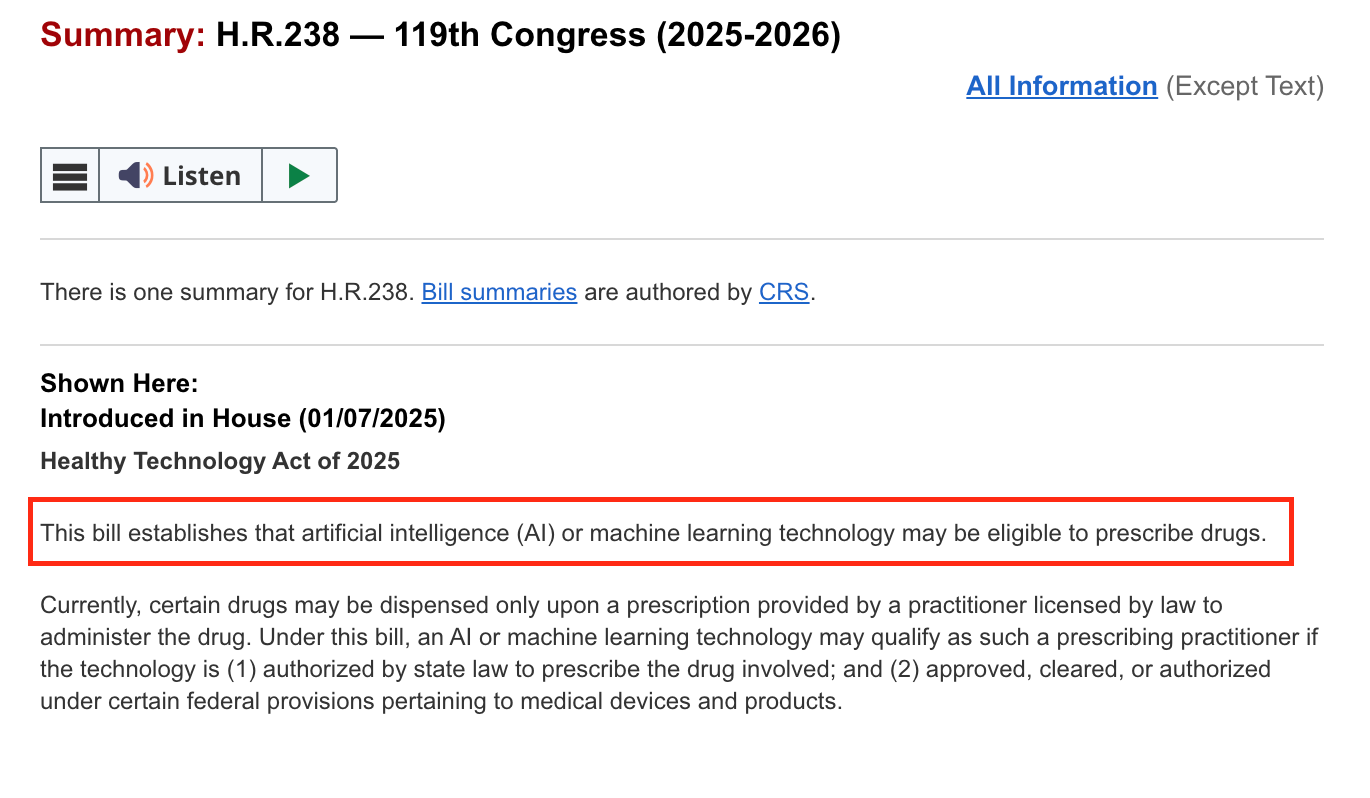

While I think that’s true, the main reason to do these papers is to figure out what medical authority we want to give to AI. Should we let it prescribe medications? Should we let it order labs? Should it become a mandatory second opinion before treatments? Should payers give it for free as a triaging tool? Where should we assign liability now that we know where mistakes tend to occur? Doing these papers helps us answer questions like this.

Each additional paper teaches us something new about where AI can be deployed and what powers we want to give it. This is how AI will materially change the workflow of providers and how patients get care in the first place.

Plus, they’re just cool papers. Bots vs. humans is the fight we’re all going to face anyway, so we might as well understand their weaknesses now so we can fight back.

Thinkboi out,

Nikhil aka. “Eval Eye” aka. “Just one more paper bro, we’ll solve healthcare AI with one more paper”

Thanks to Payal Patnaik and Hashem Zikry for reading drafts of this.

Twitter: @nikillinit

IG: @outofpockethealth

Other posts: outofpocket.health/posts


If you’re enjoying the newsletter, do me a solid and shoot this over to a friend or healthcare slack channel and tell them to sign up. The line between unemployment and founder of a startup is traction and whether your parents believe you have a job.

Healthcare 101 Starts soon!

Our crash course teaches the basics of US healthcare in a simple-to-understand and fun way. Understand who the different stakeholders are, how money flows, and what trends are shaping the industry. Each day we’ll tackle a few different parts of healthcare and walk through how they work with diagrams, case studies, and memes. Lightweight assignments and quizzes afterward will help solidify the material and prompt discussion in the student Slack group.


Interlude - Our 3 Events + LLMs in healthcare

We have 3 events this fall.

Data Camp sponsorships are already sold out! We have room for a handful of sponsors for our B2B Hackathon and our OPS Conference, both of which already have a full house of attendees.

If you want to connect with a packed, engaged healthcare audience, email sales@outofpocket.health for more details.